Thousands of medical artificial intelligence models demonstrate remarkable accuracy in laboratory settings, yet very few have successfully made the leap into the complex, dynamic environment of a real-world hospital. This persistent gap between potential and practice highlights a fundamental challenge that is slowing the adoption of technologies designed to revolutionize patient care. The issue is not a lack of data or processing power but something far more nuanced: the absence of context. This article explores the critical role context plays in medical AI, answering key questions about why it is the missing link for successful clinical integration and what can be done to bridge this divide. Readers will gain insight into the nature of contextual failures, their real-world impact, and the path forward for developing more intelligent, reliable, and equitable AI systems for healthcare.

Key Questions and Topics

What Are Contextual Errors in Medical AI

The central problem hindering many advanced medical AI systems is the prevalence of contextual errors. These occur when a model produces a recommendation that may be correct in a sterile, data-driven vacuum but is not accurate, relevant, or actionable within the specific context of an individual patient or clinical setting. This is not a minor bug but a systemic weakness that renders many AI tools impractical for frontline use. A model might generate a textbook-perfect diagnosis that ignores how rare the disease is in the patient’s geographic region, the prevailing standards of a particular medical specialty, or socioeconomic factors that heavily influence treatment adherence and outcomes.

These errors stem from a fundamental limitation in the data used to train the AI. Most datasets lack the rich, nuanced information that clinicians instinctively use to make real-world judgments. As a result, the models learn to provide answers that appear sensible on the surface but are practically irrelevant or even counterproductive when applied to a person’s life. The solution requires a comprehensive strategy focused on embedding this missing contextual awareness into the very fabric of medical AI. This involves enriching the training data with real-world variables, developing more sophisticated evaluation benchmarks, and fundamentally redesigning the AI’s architecture to process and adapt to context in real time.

How Does a Lack of Context Manifest in Clinical Practice

The tangible impact of context-blind AI can be seen across various medical scenarios, leading to flawed or incomplete recommendations that could undermine patient care.

The Silo of Medical Specialties

Modern medicine is highly specialized, with clinicians viewing complex cases through the lens of their specific expertise. For example, a patient presenting to an emergency room with both neurological and respiratory symptoms might be evaluated by a neurologist and a pulmonologist, each focusing on their respective organ system. An AI model trained predominantly on data from a single specialty, such as neurology, would likely replicate this siloed approach. It might offer a recommendation based solely on the neurological data, potentially overlooking the critical insight that the combination of symptoms points toward a more complex multisystem disease that neither specialist would identify alone.

To overcome this, the next generation of AI must be trained on cross-disciplinary data. The goal is to develop sophisticated models capable of shifting between different contextual frameworks. Such an AI could synthesize information from multiple specialties simultaneously, identifying the most relevant data patterns for a given clinical picture and providing a more holistic and accurate assessment than any single-specialty model could achieve.

The Importance of Geographic Location

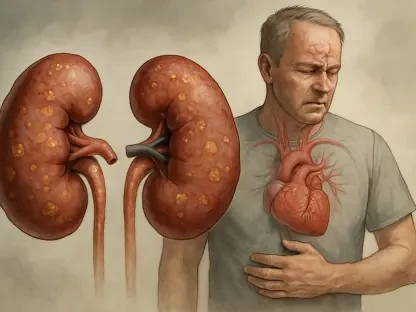

A one-size-fits-all approach to medicine is particularly ineffective in a global health landscape. An AI that provides the same answer to the same clinical question, regardless of whether the patient is in South Africa, the United States, or Sweden, is likely to be wrong in most of those locations. Each geographic region has a unique profile of disease prevalence, approved treatments, available medical infrastructure, and regulatory standards. A clinician’s plan for a patient at risk of organ failure would differ drastically based on these local factors.

To be truly useful on a global scale, medical AI must incorporate geographic data to generate location-specific, and therefore more accurate and actionable, recommendations. Research is actively underway to create models that can dynamically adjust their outputs based on a patient’s location. This innovation is a crucial step toward improving health equity and ensuring that AI-driven advice is clinically relevant and practically applicable everywhere, not just in the regions where the training data originated.
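One simple way to see why location matters is Bayes' rule: the same positive finding implies very different disease probabilities when local prevalence differs. The sketch below is only an illustration of that arithmetic, not a description of how any particular model works. The region names, prevalence figures, and the sensitivity and specificity defaults are all hypothetical.

```python
from typing import Dict

# Hypothetical prevalence figures for illustration only; not real data.
REGIONAL_PREVALENCE: Dict[str, float] = {
    "region_a": 0.10,   # disease is common here
    "region_b": 0.001,  # disease is rare here
}


def post_test_probability(prevalence: float,
                          sensitivity: float,
                          specificity: float) -> float:
    """Probability of disease given a positive finding (Bayes' rule)."""
    true_positive = sensitivity * prevalence
    false_positive = (1.0 - specificity) * (1.0 - prevalence)
    return true_positive / (true_positive + false_positive)


def location_aware_probability(region: str,
                               sensitivity: float = 0.90,
                               specificity: float = 0.95) -> float:
    """Apply the same finding against the local prevalence of a region."""
    return post_test_probability(REGIONAL_PREVALENCE[region],
                                 sensitivity, specificity)


if __name__ == "__main__":
    for region in REGIONAL_PREVALENCE:
        print(region, round(location_aware_probability(region), 3))
```

With these assumed numbers, an identical finding corresponds to roughly a two-in-three probability of disease in the high-prevalence region and under two percent in the low-prevalence one, which is why a single location-blind answer cannot be right everywhere.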

The Influence of Socioeconomic and Cultural Factors

A patient’s life circumstances, including their financial status, social support network, and cultural beliefs, profoundly affect their health behaviors and ability to access care. This vital information is rarely captured in the structured fields of electronic health records (EHRs), which are the primary data source for most medical AI systems. Consider a patient who, after a referral to a specialist, arrives in the emergency department with severe symptoms, having never made the recommended appointment. A standard, context-unaware AI might simply suggest sending another reminder.

This simplistic response fails to address potential underlying barriers, such as the patient living in a remote area, lacking transportation, or being unable to afford time off from work. A truly intelligent model would account for these socioeconomic constraints. Instead of a simple reminder, it might suggest more practical interventions, such as connecting the patient with transportation resources or social services. By incorporating this deeper layer of human context, AI can become a tool for dismantling barriers to care rather than reinforcing existing health disparities.
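As a rough illustration of the difference between a context-unaware and a context-aware follow-up, the sketch below maps known barriers to the interventions mentioned above and falls back to a plain reminder only when no barrier is recorded. It is a deliberately simple rule table under stated assumptions, not a real clinical system: the barrier labels, the mapping, and the function name are hypothetical.

```python
from typing import Dict, Iterable, List

# Hypothetical mapping from a recorded barrier to a follow-up action.
BARRIER_TO_ACTION: Dict[str, str] = {
    "remote_area": "connect patient with transportation resources",
    "no_transportation": "connect patient with transportation resources",
    "cannot_afford_time_off": "refer patient to social services",
}

DEFAULT_ACTION = "send appointment reminder"


def follow_up_actions(barriers: Iterable[str]) -> List[str]:
    """Return de-duplicated actions for the known barriers, in order.

    Unknown barrier labels are ignored. If nothing applies, fall back
    to the context-unaware default of sending a reminder.
    """
    actions: List[str] = []
    for barrier in barriers:
        action = BARRIER_TO_ACTION.get(barrier)
        if action is not None and action not in actions:
            actions.append(action)
    return actions or [DEFAULT_ACTION]


if __name__ == "__main__":
    print(follow_up_actions([]))
    print(follow_up_actions(["remote_area", "cannot_afford_time_off"]))
```

The point of the sketch is the input, not the logic: the lookup is trivial once barriers are captured, but that information is rarely present in structured EHR fields in the first place.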

What Other Challenges Impede Medical AI Adoption

Beyond the core problem of contextual errors, several other significant hurdles must be overcome before AI can be widely integrated into clinical workflows. A primary challenge is establishing trust among patients, clinicians, and regulatory bodies. To build this confidence, AI models must be both reliable and transparent. This means they should provide easily understandable recommendations and, crucially, possess the humility to respond with “I don’t know” when they lack sufficient data or confidence to reach a sound conclusion.
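One common way to give a model an "I don't know" option is to abstain whenever its top confidence falls below a threshold. The following is a minimal sketch of that idea, assuming the model exposes a probability per candidate label. The `Recommendation` type, the function name, and the 0.8 default threshold are hypothetical choices for illustration, and raw model probabilities would need calibration before being trusted this way.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Recommendation:
    label: Optional[str]  # None means the model abstains
    confidence: float
    message: str


def recommend_or_abstain(probabilities: Dict[str, float],
                         threshold: float = 0.8) -> Recommendation:
    """Return the top label only if its probability meets the threshold."""
    if not probabilities:
        return Recommendation(None, 0.0,
                              "I don't know: no prediction is available.")
    # Pick the candidate label with the highest probability.
    label, confidence = max(probabilities.items(), key=lambda item: item[1])
    if confidence < threshold:
        return Recommendation(
            None, confidence,
            "I don't know: confidence is below the required threshold.")
    return Recommendation(label, confidence, f"Suggested: {label}")


if __name__ == "__main__":
    print(recommend_or_abstain({"condition_x": 0.92, "condition_y": 0.08}))
    print(recommend_or_abstain({"condition_x": 0.55, "condition_y": 0.45}))
```

The threshold makes the trade-off explicit: raising it produces fewer but more dependable recommendations, and lowering it does the reverse.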

Another critical area for development is human-AI collaboration. The current paradigm often resembles a simple chatbot, where a user asks a question and receives a static answer. A more advanced and truly collaborative interface would tailor its communication to the user’s expertise, providing simplified explanations for a patient and detailed clinical evidence for a physician. True collaboration also requires a two-way exchange of information. An AI engaged in a complex task should be able to actively seek clarification or additional data from its human user to refine its analysis and achieve a more accurate outcome.

Summary

The journey to integrate artificial intelligence into medicine is not merely a technical challenge but one that requires a deep understanding of human context. Current models often fail in real-world settings because they lack awareness of critical factors like medical specialty norms, geographic realities, and a patient’s socioeconomic background. Overcoming these “contextual errors” is paramount. The path forward involves enriching training data, creating smarter evaluation methods, and building AI architectures that can adapt to individual circumstances.

Furthermore, successful implementation depends on fostering trust through transparency and developing truly collaborative human-AI interfaces. As these systems become more sophisticated, their potential extends far beyond administrative efficiency. They promise to become dynamic partners in the patient journey, assisting with everything from diagnosis to personalized treatment planning. Achieving this vision requires a responsible, collaborative effort from the entire medical AI community, ensuring that these powerful tools are developed and deployed with a constant focus on improving the quality and equity of patient care.

Final Thoughts

The successful integration of AI into healthcare depends not just on algorithmic power but on the thoughtful inclusion of human context. The realization that a model’s “correct” answer can be practically wrong is driving a necessary shift in the field toward systems that account for the intricate variables of a patient’s life, from their zip code to their work schedule. That shift is what can transform AI from a promising but flawed tool into a reliable clinical partner.

Ultimately, the most significant progress will come when developers, clinicians, and patients collaborate to define what “intelligence” truly means in a medical setting. A responsible AI is one that enhances, rather than replaces, human expertise and shows humility in the face of uncertainty. Technology’s greatest value is realized when it is designed to serve the complex, nuanced, and deeply human reality of medicine.