A comprehensive analysis of the burgeoning conflict over the regulation of artificial intelligence within the American health insurance industry reveals a complex and politically charged landscape where traditional partisan allegiances are fracturing. The core of the debate centers on a fundamental question: Should individual states have the authority to impose safeguards and restrictions on how insurers use AI, or should the federal government preempt such efforts to foster uninhibited technological innovation? This issue has created unusual political alliances, pitting figures like Republican Governor Ron DeSantis of Florida and the Democratic-led government of Maryland, who advocate for state-level controls, against an opposing coalition that includes President Donald Trump and Democratic Governor Gavin Newsom of California, who favor limiting state intervention.

The New Political Battleground: A Tech Turf War

The primary theme driving the push for regulation is a deep-seated public and professional mistrust of both AI technology and the practices of health insurance companies. This sentiment is reinforced by significant polling data. A December poll from Fox News indicated that a substantial 63% of American voters, encompassing majorities from both the Democratic and Republican parties, feel “very” or “extremely” concerned about the rise of artificial intelligence. This widespread anxiety is compounded by a well-documented public frustration with the health insurance sector’s cost-containment strategies. A January poll by KFF highlighted widespread discontent with industry practices such as prior authorization.

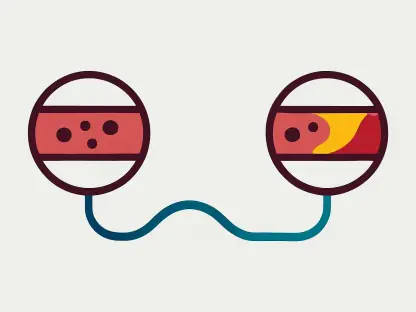

This unease has been further amplified by investigative journalism from outlets like ProPublica, which have exposed instances of insurers allegedly using algorithms to issue rapid-fire denials for insurance claims and prior authorization requests, often with what appears to be minimal or no substantive review by a qualified medical professional. This convergence of concerns has created a fertile ground for regulatory action, with many viewing AI as a tool that could exacerbate existing problems of opacity and unfairness in insurance decisions.

The Twin Crises of Confidence Fueling the Debate

In response to these concerns, a significant trend has emerged: a grassroots, state-level rebellion against the unchecked use of AI by insurers. At least six states have already taken legislative action, with Arizona, Maryland, Nebraska, and Texas passing new laws in 2025, and Illinois and California having enacted similar bills the year prior. The legislative momentum continues, with lawmakers in Rhode Island planning to reintroduce a bill that would mandate data collection on technology use by insurers after it failed to pass both legislative chambers last year.

In North Carolina, a proposed bill that would bar AI from being the sole factor in a coverage denial has garnered notable bipartisan interest, particularly among Republican legislators. Governor DeSantis of Florida has also taken a prominent role, introducing a state-level “AI Bill of Rights.” This initiative includes specific provisions to restrict AI’s application in processing insurance claims and would give a state regulatory body the authority to inspect the algorithms used by insurers, underscoring a commitment to what he terms moral and ethical technological development that aligns with American values.

The Contenders: A Two-Front War Over Regulation

On the other side of this debate is a powerful pushback from the health insurance industry and a contingent of federal and state leaders who prioritize innovation and a unified national policy. This viewpoint is most forcefully articulated by President Donald Trump, who has framed the development of AI as a geopolitical “race with adversaries for supremacy.” Through a December executive order, his administration sought to preemptively block most state-level efforts to govern AI, arguing that “excessive State regulation” thwarts the imperative for American companies to “innovate without cumbersome regulation.” The executive order goes so far as to threaten legal action and the restriction of certain federal funds for any state deemed to have enacted “excessive” regulations.

The health insurance industry has echoed this call for a streamlined, national approach while simultaneously working to reframe the public perception of AI. During a House Ways and Means Committee hearing, top executives from major insurers like Cigna and UnitedHealth Group either denied or sidestepped questions about using advanced AI to reject claims. Cigna’s CEO, David Cordani, unequivocally stated that AI is “never used for a denial.” Industry players are actively promoting AI as a beneficial tool for efficiency and improved patient outcomes. For instance, Optum, a subsidiary of UnitedHealth Group, recently announced a new tech-powered prior authorization system, heavily emphasizing its ability to deliver “speedier approvals” and reduce administrative friction. Industry trade groups, such as the Alliance of Community Health Plans and AHIP, have mobilized against state-level bills. They argue that a “patchwork of state and federal laws” creates an undue “regulatory burden” that diverts resources away from patient care. AHIP has advocated for a “consistent, national approach” through a federal framework that balances innovation with patient protection.

Voices From the Front Lines: Physicians, Lawmakers, and Industry Leaders

The medical community has largely thrown its support behind these state-led regulatory efforts. The American Medical Association (AMA), a powerful voice for physicians, has officially endorsed state regulations aimed at increasing accountability and transparency from commercial health insurers. Dr. John Whyte, the organization’s CEO, noted that despite insurers already using AI, physicians continue to grapple with significant problems such as delayed patient care, opaque and inconsistent insurer decisions, and a crushing administrative workload, suggesting that the technology has not alleviated, and may even be worsening, these long-standing issues.

Similarly, New York Assembly member Alex Bores, a computer scientist and proponent of a comprehensive AI governance bill in his state, argues that regulation is a natural and necessary step. He points out that consumers already find insurance company decisions “inscrutable,” and layering on AI—a technology that “cannot by its nature explain itself”—is unlikely to improve clarity or trust.

Navigating the Maze: The Legal and Practical Hurdles

The path to effective regulation, however, is fraught with legal and practical challenges. Legal scholars like Daniel Schwarcz from the University of Minnesota have pointed out significant limitations in the current wave of state legislation. A major hurdle is that states lack the authority to regulate “self-insured” employer health plans, which fall under federal jurisdiction and cover a large portion of the American workforce. Furthermore, Schwarcz notes that many of these laws are vaguely worded. While they may require a “human to sign off” on an AI-generated decision, they often fail to specify the depth or nature of that review, creating a risk that human oversight could devolve into a mere rubber-stamping of automated suggestions.

The situation in California under Governor Gavin Newsom illustrates the nuanced balancing act some leaders are attempting. Newsom has signed targeted AI regulations, such as a law requiring insurers to ensure their algorithms are applied equitably. However, he has also vetoed more sweeping legislation that would have imposed stricter mandates on how the technology operates and is disclosed. Observers suggest this approach is a pragmatic effort to protect consumers while ensuring that California, a global hub for the tech industry, does not stifle the innovation that fuels its economy.

Finally, the federal preemption strategy championed by the Trump administration faces significant legal questions of its own. Carmel Shachar, a health policy scholar at Harvard Law School, has suggested that the executive order is “possibly unconstitutional.” She argues that the authority to preempt state law generally resides with Congress, not the executive branch, and points out that federal lawmakers have twice considered and declined to pass legislation that would have barred states from regulating AI. This creates a fundamental question about the separation of powers and sets the stage for potential legal challenges.

As Assembly member Bores frames it, the current debate is not a choice between federal or state regulation, but rather “a question of, should it be state or not at all?” This encapsulates the central finding: a profound division exists between those who believe states must act as laboratories of democracy to erect necessary guardrails around a powerful new technology, and those who contend that such actions will only fragment and hinder national progress in a critical technological race.