Ivan Kairatov is at the forefront of a revolution in neurological diagnostics, merging the ubiquity of smartphones with the power of machine learning to detect diseases like Parkinson’s earlier than ever before. His team’s recent breakthrough demonstrates how a simple app can analyze subtle motor impairments to predict dopamine deficiency, a key marker of the disease, potentially replacing the need for expensive and invasive brain scans in initial screenings. In our conversation, he unpacked the mechanics of this digital assessment, exploring how it captures data invisible to the naked eye and how its true power is unlocked when combined with traditional clinical expertise. We also touched upon the profound implications for high-risk groups, the current challenges in detecting the disease in its mildest forms, and what the future holds for consumer technology in managing neurodegenerative conditions.

Your research achieved 85% accuracy in predicting dopamine deficiency by combining smartphone data with clinical scores. Could you walk us through how this digital assessment works in practice and what specific motor functions it measures that a standard clinical exam might overlook?

Absolutely. Imagine a patient, perhaps someone in the early stages of concern, using a familiar device—their own smartphone. The app guides them through a series of simple tasks designed to test things like gait, manual dexterity, and tremor. This isn’t just about whether they can do the task; it’s about how they do it. The phone’s sensors are performing high-frequency sampling of their movements, capturing thousands of data points per second. What this allows us to see are the things a clinician might miss in a standard exam. We’re not just looking for a visible tremor; we’re detecting subclinical tremors—minuscule, almost imperceptible instabilities that are often the earliest whispers of dopamine deficiency. A clinical exam is a snapshot, a valuable one, but this is like having a high-speed camera, revealing the subtle hesitations and inconsistencies in movement that precede more obvious symptoms. That’s the digital edge.

The article mentions the smartphone app uses high-frequency sampling to detect subtle signs like subclinical tremors. Can you provide a few examples of the tasks patients perform on the app and explain how the collected data is translated into a prediction of their DaT scan status?

The tasks themselves are deceptively simple. For dexterity, a patient might be asked to tap two alternating targets on the screen as quickly as possible. For tremor, they might hold the phone steady in their outstretched hand. For gait, they could walk a short distance with the phone in their pocket. During each of these, the phone’s accelerometer and gyroscope are working overtime. They are collecting raw motion data that, to the human eye, would just look like a chaotic squiggle on a graph. This is where machine learning comes in. Our models are trained on data from 93 patients who had both the smartphone assessment and a definitive DaT scan. The algorithm learns to recognize the specific patterns, the “digital signatures,” in that motion data that correlate with a positive or negative DaT scan. It learns what that slight waver in the hand-holding task truly means or how a tiny variation in stride length predicts dopamine loss. The smartphone-only model achieved 80% accuracy, showing just how powerful these digital biomarkers are on their own.
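As an editorial illustration of what a "digital signature" might look like, here is a minimal sketch of two hand-crafted features: the dominant frequency and amplitude of a hand-hold recording, and the variability of tapping intervals. It is not the team's pipeline. The function names, the 100 Hz sample rate, the 3 to 8 Hz search band, and the synthetic signal are all assumptions made for the example.

```python
import math
import statistics

def dominant_frequency(samples, sample_rate_hz, band=(3.0, 8.0)):
    """Return (frequency_hz, amplitude) of the strongest component inside
    `band`, using a plain discrete Fourier transform (no external libraries).
    The default band is an assumed search range, not a study parameter."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove constant offset (e.g. gravity)
    best_freq, best_amp = 0.0, 0.0
    for k in range(1, n // 2):
        freq = k * sample_rate_hz / n
        if not band[0] <= freq <= band[1]:
            continue
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        im = sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(centered))
        amp = 2 * math.hypot(re, im) / n  # peak amplitude of this frequency bin
        if amp > best_amp:
            best_freq, best_amp = freq, amp
    return best_freq, best_amp

def tap_interval_cv(tap_times_s):
    """Coefficient of variation of the intervals between successive taps.
    Higher values mean a less regular tapping rhythm."""
    intervals = [b - a for a, b in zip(tap_times_s, tap_times_s[1:])]
    return statistics.stdev(intervals) / statistics.mean(intervals)

# Synthetic hand-hold trace (made up): 2 s at 100 Hz, a 5 Hz oscillation of
# amplitude 0.02 riding on a constant 9.81 offset.
RATE_HZ = 100
trace = [9.81 + 0.02 * math.sin(2 * math.pi * 5.0 * i / RATE_HZ) for i in range(200)]
freq_hz, amplitude = dominant_frequency(trace, RATE_HZ)

# Synthetic tap timestamps in seconds (made up): one regular, one uneven.
regular_cv = tap_interval_cv([0.0, 0.2, 0.4, 0.6])
uneven_cv = tap_interval_cv([0.0, 0.2, 0.5, 0.7])
```

In a real system, features like these would be only the input to a trained model. The interview does not describe which features or model family the team actually used.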

It’s fascinating that the smartphone model alone was comparable to clinical scores, but combining them was most effective. What does this tell us about the unique strengths of each data source, and why is their integration so crucial for achieving the highest diagnostic accuracy?

This is really the core of our findings. It tells us that technology isn’t here to replace clinicians but to augment them. The clinical score, the MDS-UPDRS-III, is an incredibly rich source of information. It captures a seasoned expert’s holistic evaluation of everything from facial expression to posture and speech, nuances a phone can’t yet grasp. That’s its strength: contextual, human-centered assessment. The smartphone, on the other hand, provides objective, quantifiable data at a scale beyond human perception. It replaces subjective observation of, say, a tremor with precise measurements of its frequency and amplitude. When we combine them, we get the best of both worlds. The clinical score provides the “what,” and the digital data provides the “how precisely.” That synergy is what pushed our predictive accuracy up to 85%. It suggests that the future of diagnostics lies in this powerful partnership between human expertise and machine precision.
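One simple way to picture this kind of integration is a single model that takes the clinical score and the smartphone features as joint inputs. The sketch below uses a logistic function for that purpose. It is an assumption for illustration only: the interview does not say how the team fused the two sources, and the weights, bias, and standardized input values here are placeholders, not fitted parameters.

```python
import math

def fused_probability(clinical_score, digital_features, weights, bias):
    """Logistic model over [clinical_score, *digital_features].
    Returns a value in (0, 1) read as the estimated probability of an
    abnormal DaT scan. Inputs are assumed to be standardized."""
    inputs = [clinical_score, *digital_features]
    if len(inputs) != len(weights):
        raise ValueError("one weight per input is required")
    z = bias + sum(w * x for w, x in zip(weights, inputs))
    return 1.0 / (1.0 + math.exp(-z))

# Placeholder parameters (hypothetical, chosen only to make the sketch run).
WEIGHTS = [0.8, 0.6, 0.4]   # clinical score, tremor feature, tapping feature
BIAS = 0.0

p_low = fused_probability(0.0, [0.5, -0.2], WEIGHTS, BIAS)
p_high = fused_probability(1.0, [0.5, -0.2], WEIGHTS, BIAS)
p_neutral = fused_probability(0.0, [0.0, 0.0], WEIGHTS, BIAS)
```

With positive weights, a higher clinical score raises the estimate while the digital features shift it further in either direction, which is the "best of both worlds" idea in its most basic form.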

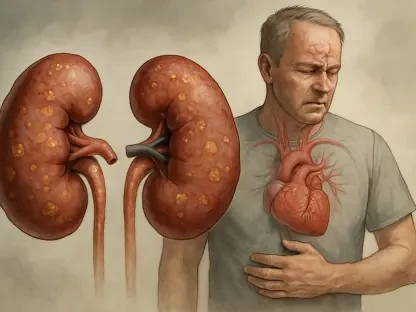

The study highlights that over 60% of people with iRBD show early dopamine deficiency. How do you envision this smartphone tool being implemented for this high-risk group to pre-screen for DaT imaging, and what impact could this earlier detection have on patient outcomes?

This is where I believe the most immediate, life-changing impact lies. People with isolated REM sleep behavior disorder, or iRBD, are in a precarious position; we know a significant portion of them will develop a full-blown alpha-synucleinopathy like Parkinson’s. Today, we often wait for more definitive motor symptoms to appear. With this tool, a neurologist could give their iRBD patient an eight-minute test on a smartphone. The results could help triage who needs an expensive, radiation-based DaT scan immediately and who can be monitored. Getting that early warning—identifying that dopamine deficiency years before it becomes debilitating—is a paradigm shift. It opens a window for potential interventions that could slow or alter the disease’s progression. It moves us from a reactive to a proactive stance, giving patients and doctors a crucial head start in a fight where time is everything.

The models were reportedly less effective for milder Parkinson’s cases. What are the primary challenges in detecting these very early motor changes, and what are the next steps for refining the technology to improve its sensitivity for patients at the very beginning of the disease?

That’s a critical point and a humbling reminder that this is still an evolving field. In the very mildest cases, the motor signs are incredibly faint. The “signal” of the disease is almost indistinguishable from the “noise” of normal human variation in movement. Our current models, trained on a modest dataset, sometimes struggle to pick up on these whisper-quiet signals reliably. The primary challenge is sensitivity. To improve it, we need two things. First, we need more data—far more than our initial 93 participants—to train the algorithms to recognize even more subtle patterns. Second, we need to continue refining the digital tasks themselves to better amplify these early, faint signs. It’s a bit like tuning a radio to catch a distant station; we need to build a better antenna and fine-tune the receiver to isolate that specific frequency.
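To make the distinction between overall accuracy and sensitivity concrete, here is a small sketch that computes both from true and predicted labels. The labels are made up for illustration and are not study data; the function name is likewise hypothetical.

```python
def screening_metrics(y_true, y_pred):
    """Compute accuracy, sensitivity, and specificity for binary labels
    (1 = dopamine deficiency present, 0 = absent)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,
        # Share of truly affected people the test catches.
        "sensitivity": tp / (tp + fn) if (tp + fn) else float("nan"),
        # Share of unaffected people the test correctly clears.
        "specificity": tn / (tn + fp) if (tn + fp) else float("nan"),
    }

# Made-up example: four affected and four unaffected people, with the
# model catching only one of the four affected cases.
toy_true = [1, 1, 1, 1, 0, 0, 0, 0]
toy_pred = [1, 0, 0, 0, 0, 0, 0, 0]
metrics = screening_metrics(toy_true, toy_pred)
```

In this toy case the model is right for five of eight people, yet it finds only one in four affected individuals. That gap is why a screening tool aimed at the mildest cases has to be judged on sensitivity and not on accuracy alone.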

What is your forecast for the role of consumer technology, like smartphones, in the routine screening and management of neurodegenerative diseases over the next decade?

I am incredibly optimistic. Over the next decade, I forecast that these tools will transition from research curiosities to indispensable parts of the standard clinical workflow. The smartphone will evolve into a personal biomarker device. We won’t just use it for a one-time screening; patients will perform quick, regular check-ins from home, generating a continuous stream of data on their motor function. This will allow clinicians to track disease progression and a patient’s response to medication with a precision we can hardly imagine today. It will democratize diagnostics, making screening accessible far beyond specialized centers. This technology will empower patients, providing them with tangible data about their own health, and it will arm clinicians with the detailed, longitudinal insights needed to provide truly personalized, proactive care for neurodegenerative diseases.