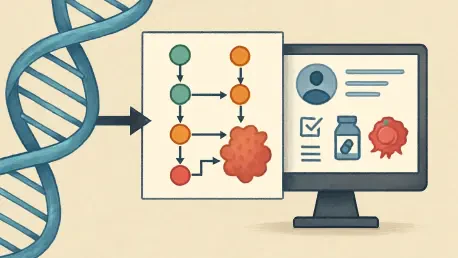

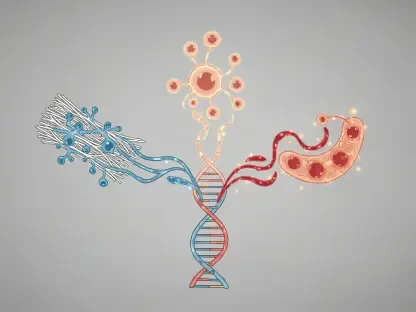

A fundamental shift is underway in oncology: the promise of precision medicine is rapidly becoming a clinical reality, moving treatment from generalized protocols to strategies tailored to each patient’s genetic makeup. A pivotal research effort has culminated in an open-source clinical bioinformatics pipeline, a framework designed to decode the complex language of cancer genetics. The tool addresses a critical challenge: translating the enormous volumes of genomic data produced by next-generation sequencing (NGS) into clear, actionable insights for clinicians. Its primary mission is to bridge the often-daunting gap between raw genetic information and its practical application in patient care, helping medical professionals make better-informed and more effective therapeutic decisions. This advancement represents a significant step toward making personalized cancer treatment more accessible, standardized, and impactful for patients around the globe.

Bridging the Gap Between Data and Clinical Decisions

The Genomic Data Overload

The core obstacle to deploying precision oncology at scale lies in the inherent complexity of cancer, a disease fundamentally driven by an accumulation of genetic mutations. Next-generation sequencing offers an unprecedented ability to map a patient’s tumor genome comprehensively, but this power brings its own problem: data overload. The sheer volume and intricacy of the data create a formidable bottleneck in clinical settings, where timely decision-making is paramount. Clinicians are often confronted with thousands of genetic variants per patient, each of which must be interpreted to determine its relevance to the disease and its potential as a therapeutic target. This analysis demands specialized expertise and computational resources that are not always available, creating a barrier to the widespread adoption of genomic medicine.

This deluge of information can lead to “analysis paralysis,” in which sifting through the data for clinically relevant findings becomes so time-consuming and complex that it delays the start of appropriate treatment. The risk is that critical therapeutic windows may be missed while clinicians wait for a definitive interpretation of the genomic results. Furthermore, the lack of standardized analytical methods across laboratories can produce inconsistent findings, undermining a clinician’s confidence in acting on the results. The imperative, therefore, is to build systems that can not only manage this influx of data but also process it systematically, reliably, and quickly enough to fit the fast-paced environment of clinical oncology. Only then can genomic insights translate directly into improved patient care.

A Streamlined Path to Insight

The newly developed pipeline addresses genomic data overload by providing a streamlined, automated analysis workflow. Using a suite of computational tools and validated algorithms, it systematically processes raw NGS data, narrowing millions of sequencing reads down to a focused list of clinically significant variants. The framework is designed to identify specific genetic alterations, such as single-nucleotide variants, small insertions, and deletions, that are known to be associated with a patient’s cancer type and may have implications for therapy selection. This process filters out the vast majority of benign or irrelevant genetic “noise,” allowing clinicians to concentrate on the variants that truly matter. Automation not only shortens the diagnostic timeline but also improves the accuracy and consistency of interpretation, giving subsequent clinical decisions a solid, evidence-based foundation.
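To make the filtering step concrete, here is a minimal Python sketch of how a list of variant calls might be narrowed down. The source does not describe the pipeline’s actual filters, so everything here is an assumption for illustration: the `Variant` fields, the thresholds (`MIN_DEPTH`, `MIN_VAF`, `MAX_POP_AF`), and the small `ACTIONABLE_GENES` set, which stands in for a curated knowledge base.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    gene: str
    kind: str      # "SNV", "insertion", or "deletion"
    depth: int     # number of reads covering the position
    vaf: float     # variant allele fraction in the tumor sample
    pop_af: float  # allele frequency in a population database (germline proxy)


# Hypothetical, illustrative thresholds; real pipelines tune these per assay.
MIN_DEPTH = 100
MIN_VAF = 0.05
MAX_POP_AF = 0.01

# Hypothetical stand-in for a curated knowledge base of clinically relevant genes.
ACTIONABLE_GENES = {"EGFR", "BRAF", "KRAS", "ERBB2"}


def filter_variants(variants):
    """Keep well-supported, rare variants in genes with known clinical relevance."""
    kept = []
    for v in variants:
        if v.depth < MIN_DEPTH:
            continue  # too few reads to trust the call
        if v.vaf < MIN_VAF:
            continue  # likely sequencing noise or a low-level artifact
        if v.pop_af > MAX_POP_AF:
            continue  # common in the population: likely a benign germline polymorphism
        if v.gene not in ACTIONABLE_GENES:
            continue  # outside the (hypothetical) actionable gene list
        kept.append(v)
    return kept


calls = [
    Variant("EGFR", "deletion", 450, 0.32, 0.0),
    Variant("TTN", "SNV", 300, 0.40, 0.0),   # gene not in the actionable list
    Variant("BRAF", "SNV", 40, 0.25, 0.0),   # insufficient read depth
    Variant("KRAS", "SNV", 500, 0.48, 0.15), # too common in the population
]
print([v.gene for v in filter_variants(calls)])  # ['EGFR']
```

Each check removes one common source of noise (thin read support, low allele fraction, common germline polymorphisms, genes without known clinical relevance), which is why a handful of simple rules can shrink a long call set dramatically.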

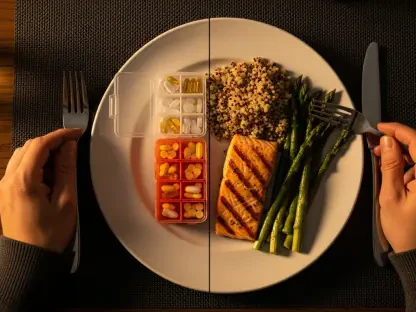

The practical impact of this streamlined approach on the clinical workflow is profound. It transforms the complex, often manual task of genomic interpretation into a more manageable and integrated component of patient care. By providing a clear and concise report of actionable findings, the pipeline empowers oncologists who may not have specialized training in bioinformatics to leverage the power of genomic data. This enables a more dynamic and personalized treatment strategy, where therapies can be selected based on the specific molecular drivers of a patient’s tumor. Ultimately, this fosters a more patient-centered approach to oncology, where treatment is no longer based on broad statistical averages but is instead precisely tailored to the individual’s genetic profile, maximizing the potential for positive outcomes while minimizing exposure to ineffective treatments.

Core Principles Driving a New Paradigm

Fostering Collaboration Through Open-Source Access

A defining and transformative characteristic of this initiative is its steadfast commitment to an open-source model, a principle that stands in stark contrast to the often restrictive and costly proprietary systems that dominate the market. By making the pipeline’s software, source code, and associated tools freely available to the public, the researchers are effectively democratizing access to state-of-the-art genomic analysis technology. This approach dismantles significant financial barriers, enabling a broader range of healthcare institutions, including smaller clinics and hospitals in underserved regions, to implement precision oncology programs without the burden of expensive licensing fees. This inclusive model ensures that the benefits of advanced genomic medicine are not confined to a handful of well-funded research centers but can be extended to a much larger and more diverse patient population.

The open-source nature of the pipeline does more than just lower costs; it cultivates a vibrant and collaborative global ecosystem of innovation. It invites scientists, bioinformaticians, and clinicians from around the world to not only use the tool but also to inspect its inner workings, validate its performance, and contribute their own enhancements and modifications. This collective approach breaks down the traditional silos that can hinder scientific progress, promoting a free exchange of ideas, methodologies, and improvements. Such a dynamic environment accelerates the pace of discovery, as new features can be developed and integrated more rapidly than in a closed, proprietary system. This collaborative spirit ensures that the pipeline remains on the cutting edge of science and technology, continuously evolving to meet the complex challenges of cancer treatment.

Creating a Universal Standard

In the rapidly evolving field of genomic medicine, a significant challenge has been the lack of standardization in how genetic data is analyzed and interpreted. Methodologies can vary widely from one laboratory to another, leading to potential discrepancies in results and, consequently, inconsistent clinical outcomes for patients with similar conditions. This variability not only complicates patient care but also hinders the progress of research, as it makes it difficult to compare findings across different studies and build a cumulative body of evidence. The absence of a uniform approach can erode confidence in genomic testing and represents a major obstacle to its full integration into routine clinical practice. A universally accepted standard is essential to ensure that every patient, regardless of where they are treated, receives the same high level of care based on reliable and reproducible genomic insights.

The new pipeline directly addresses this critical need by establishing and promoting a standardized framework for the analysis of genomic variants. It incorporates a set of clear guidelines and best practices, creating a uniform methodology that can be adopted by institutions worldwide. By providing a consistent and transparent process for interpreting genomic data, the pipeline ensures that clinicians in different settings can apply its findings with a high degree of confidence and reliability. This standardization is fundamental to improving the quality and equity of patient care, as it mitigates the variability that can arise from differing analytical approaches. Moreover, it strengthens the foundation of scientific inquiry in the field. By enhancing the reproducibility of results—a cornerstone of credible research—the pipeline helps to build a more robust and trustworthy evidence base for the future development and application of genomic medicine.
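Part of what makes a standardized framework reproducible is that its interpretation rules are explicit and deterministic: the same evidence always yields the same classification. The source does not name the scheme the pipeline follows. As an assumption, the sketch below borrows the shape of the four-tier system from the joint AMP/ASCO/CAP guideline for somatic variants, in which evidence levels A and B map to Tier I, levels C and D to Tier II, variants without sufficient evidence to Tier III, and benign or likely benign variants to Tier IV. The function `assign_tier` and its inputs are hypothetical.

```python
from enum import Enum
from typing import Optional


class Tier(Enum):
    I = "strong clinical significance"
    II = "potential clinical significance"
    III = "unknown clinical significance"
    IV = "benign or likely benign"


def assign_tier(evidence_level: Optional[str], benign: bool = False) -> Tier:
    """Deterministically map a variant's evidence to a tier (AMP/ASCO/CAP-style, assumed).

    evidence_level: "A" or "B" (strong evidence), "C" or "D" (weaker evidence),
    or None when no clinical evidence is available.
    benign: True if the variant has been classified as benign or likely benign.
    """
    if benign:
        return Tier.IV
    if evidence_level in ("A", "B"):
        return Tier.I
    if evidence_level in ("C", "D"):
        return Tier.II
    return Tier.III


print(assign_tier("A").name)      # I
print(assign_tier(None).value)    # unknown clinical significance
```

Because the rules are plain code rather than individual judgment, two laboratories running the same version on the same evidence will report the same tier, which is exactly the reproducibility property the paragraph above emphasizes.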

The Technological Edge

Harnessing Artificial Intelligence for Deeper Analysis

The technological sophistication of the bioinformatics pipeline is significantly amplified by the thoughtful integration of machine learning techniques. These powerful algorithms are engineered to perform tasks that go far beyond the capabilities of traditional data analysis methods. Trained on vast and complex genomic datasets, machine learning models can recognize subtle patterns, identify intricate correlations, and uncover crucial associations between specific genetic variants and clinical outcomes that might be imperceptible to a human analyst. For instance, an algorithm could learn to identify complex mutational signatures that predict a tumor’s aggressiveness or its likelihood of responding to a particular class of drugs. This ability to derive deeper meaning from the data moves the analysis from simple variant identification to a more nuanced and predictive level of insight into the tumor’s underlying biology.
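Mutational-signature methods typically start from a simple feature: the spectrum of single-base substitutions, folded onto the pyrimidine (C or T) strand so that, for example, a G>T change is counted as C>A. The sketch below computes that six-class spectrum in Python. It shows only the kind of input a model might learn from, not a model itself; the source does not describe which features or algorithms the pipeline uses, and the function name `substitution_spectrum` is hypothetical. Published signature analyses usually extend this to 96 classes by also recording the neighboring base on each side.

```python
from collections import Counter

COMPLEMENT = {"A": "T", "C": "G", "G": "C", "T": "A"}
CLASSES = ["C>A", "C>G", "C>T", "T>A", "T>C", "T>G"]


def substitution_spectrum(snvs):
    """Return the fraction of SNVs in each of the six pyrimidine-referenced classes.

    snvs: iterable of (ref, alt) pairs of single uppercase bases, with ref != alt.
    """
    counts = Counter()
    for ref, alt in snvs:
        if ref in ("G", "A"):
            # Report on the pyrimidine strand: G>T is counted as C>A, A>G as T>C, etc.
            ref, alt = COMPLEMENT[ref], COMPLEMENT[alt]
        counts[f"{ref}>{alt}"] += 1
    total = sum(counts.values())
    return {c: counts[c] / total if total else 0.0 for c in CLASSES}


spectrum = substitution_spectrum([("C", "T"), ("G", "A"), ("G", "T"), ("T", "C")])
print(spectrum["C>T"])  # 0.5 (C>T plus G>A folded onto C>T)
```

Decomposition techniques such as non-negative matrix factorization are commonly applied to many such spectra at once to extract the recurring patterns referred to as signatures.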

The application of artificial intelligence in this context serves to enhance the pipeline’s predictive accuracy and clinical utility. By learning from an ever-growing repository of genomic and clinical data, these systems can continuously refine their predictive models, becoming more adept at forecasting a tumor’s potential behavior over time. This offers clinicians a powerful tool for risk stratification and treatment planning, allowing them to anticipate how a cancer might evolve and to select therapies with a higher probability of success. As this framework continues to mature, the deeper integration of more advanced AI and deep learning models promises to unlock even more personalized and effective therapeutic opportunities. The future of this technology points toward a system that can not only interpret a patient’s current genomic state but also simulate potential treatment responses, heralding a new era of proactive, data-driven oncology.
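The idea of a model that keeps refining itself as new cases arrive can be illustrated with online logistic regression, where each newly labeled case triggers one stochastic-gradient step. This is a deliberately simple, swapped-in technique, not the pipeline’s documented method; the two binary features (`has_marker_variant`, `high_tmb`) and the toy outcome labels are hypothetical.

```python
import math


class OnlineLogisticModel:
    """Logistic regression refined one labeled case at a time via stochastic gradient descent."""

    def __init__(self, n_features, learning_rate=0.1):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, x):
        """Estimated probability of a positive outcome (e.g. response) for feature vector x."""
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        # Numerically stable sigmoid.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def update(self, x, y):
        """One gradient step on the log-loss for a single case with label y in {0, 1}."""
        error = self.predict_proba(x) - y
        self.weights = [w - self.learning_rate * error * xi
                        for w, xi in zip(self.weights, x)]
        self.bias -= self.learning_rate * error


# Hypothetical toy stream: features are [has_marker_variant, high_tmb];
# label is 1 if the (made-up) patient responded to a targeted therapy.
cases = [([1, 0], 1), ([0, 1], 0), ([1, 1], 1), ([0, 0], 0)]

model = OnlineLogisticModel(n_features=2)
for _ in range(200):  # replay the stream to simulate many arriving cases
    for x, y in cases:
        model.update(x, y)

print(round(model.predict_proba([1, 0]), 2))
print(round(model.predict_proba([0, 0]), 2))
```

Because each update touches only one case, the model can absorb new outcomes incrementally instead of being retrained from scratch on the full history.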

Validated by Real-World Results

To substantiate its clinical utility and move beyond theoretical promise, the study backing the pipeline presents compelling real-world applications and detailed case studies that demonstrate its tangible impact on patient care. These examples provide concrete evidence of how the framework has been successfully deployed in clinical settings to directly influence patient management. The cases illustrate situations where the genomic insights generated by the pipeline led to significant and beneficial changes in treatment strategies. For instance, the identification of a rare but actionable mutation might have prompted a switch from a standard chemotherapy regimen to a highly targeted therapy, resulting in a more favorable outcome for the patient. By showcasing these positive results, the researchers effectively bridge the gap between complex data analysis and its real-world clinical relevance.

These validated outcomes do more than demonstrate the pipeline’s efficacy; they also underscore the growing synergy between bioinformatics, genomics, and clinical practice. The success stories reinforce the pipeline’s value as a practical, indispensable tool for the modern oncologist, turning genomic insights from an academic curiosity into a cornerstone of day-to-day patient care. The research shows that when sophisticated computational tools are thoughtfully designed and integrated into the clinical workflow, they can provide unprecedented clarity and direction in the fight against cancer. This validation testifies to the pipeline’s capacity to translate complex genetic information into clinically relevant actions, securing its place as a vital instrument in the advancement of precision oncology.

A Monumental Step in Personalized Oncology

The development and implementation of this open-source clinical bioinformatics pipeline represent a monumental stride forward in the collective quest to personalize cancer treatment. By dramatically improving the accessibility, standardization, and analytical power of genomic interpretation, the framework holds the promise of transforming patient outcomes on a global scale. It addresses some of the most pressing technical, collaborative, and economic challenges that have hindered the widespread adoption of precision medicine. The journey toward fully realizing genomically driven care continues, with ongoing hurdles such as the need for continuous clinician education, seamless integration with existing healthcare IT infrastructure, and navigation of complex data privacy and security regulations. Even so, initiatives like this one equip the medical community with the essential tools to confront cancer with unprecedented insight and efficacy, pointing toward an oncology future defined by a data-driven, collaborative, and deeply personalized approach to patient wellness.