Today, we sit down with Ivan Kairatov, a biopharma expert with a deep understanding of technology and innovation in the industry. With a background in research and development, Ivan has been at the forefront of exploring how artificial intelligence can transform clinical practice. In this conversation, we discuss the practical applications of AI in healthcare, the human factors that influence its integration, and how to balance technology with medical expertise. Our discussion covers the potential benefits, the hidden pitfalls, and the guiding principles that could shape safer and more effective adoption of AI in patient care.

How is AI currently shaping the way doctors approach patient diagnosis in clinical settings?

AI is playing a transformative role in clinical diagnosis by acting as a powerful decision-support tool. It helps doctors analyze vast amounts of data—think medical images, patient records, or lab results—much faster than traditional methods. For instance, AI algorithms can detect patterns in X-rays or MRIs that might indicate early-stage diseases like cancer, often before they’re visible to the human eye. It’s also being used to predict patient outcomes by analyzing historical data, which can guide doctors in crafting personalized treatment plans. Essentially, AI acts as a second pair of eyes, enhancing precision and reducing the chance of oversight.

What do you see as the most significant advantages of AI in boosting diagnostic accuracy and ensuring patient safety?

The biggest advantages lie in AI’s ability to minimize human error and improve consistency. It can process and cross-reference data at a scale no human could match, flagging potential issues like abnormal test results or subtle signs of disease progression. This leads to earlier interventions, which are often critical for patient outcomes. On the safety front, AI can help standardize care by ensuring that evidence-based guidelines are followed, reducing variability in diagnoses across different providers. It’s like having a tireless assistant that double-checks everything, ultimately saving lives through timely and accurate insights.

What are some of the major challenges or risks you’ve observed with AI implementation in healthcare environments?

One of the primary challenges is the risk of distraction or over-reliance. Doctors might get sidetracked by AI suggestions, especially if they’re presented in a way that’s hard to interpret or integrate into their workflow. There’s also the danger of becoming too confident in AI outputs, which aren’t always correct—algorithms can make mistakes due to biased training data or unexpected variables. Another concern is the potential erosion of a physician’s own judgment; if they lean too heavily on AI, they might second-guess their instincts or lose sharpness in their diagnostic skills over time. These issues highlight the need for careful design and training.

Your research highlights a framework of five key questions for integrating AI into healthcare. Can you walk us through what these guiding principles are and why they matter?

Absolutely. The framework we’ve developed focuses on five critical areas to ensure AI supports rather than hinders clinical practice. First, what type and format of information should AI present to doctors? This matters because cluttered or irrelevant data can overwhelm rather than assist. Second, when should this information be shown: immediately, after an initial review, or on demand? Timing affects how much a doctor engages with the diagnosis independently. Third, how does the AI explain its reasoning? Transparency helps align AI suggestions with clinical logic. Fourth, how does AI affect bias and complacency? Over-reliance can dull critical thinking. And fifth, what are the long-term risks of dependency on AI? We worry about skill erosion if doctors lean on it too much. These questions are essential to designing AI that complements human expertise.

Why is the way AI presents information so crucial for a doctor’s focus and diagnostic precision?

The presentation of information directly influences how doctors process and act on it. If AI outputs are cluttered, overly technical, or buried in a complex interface, they can distract rather than help, pulling attention away from the patient or other critical data. On the other hand, if the information is clear, concise, and visually intuitive—say, highlighting key findings on a scan with simple annotations—it can sharpen focus and improve accuracy. Poor formatting can also introduce interpretive biases, where doctors might misread or overemphasize AI suggestions. It’s all about making sure the tool fits seamlessly into the clinical workflow.

How does the timing of AI feedback influence a physician’s ability to maintain and develop their diagnostic skills?

Timing is a game-changer. If AI feedback is given immediately, before a doctor has had a chance to form their own assessment, it can bias their interpretation and reduce independent thinking. They might anchor too heavily on the AI’s suggestion. However, if the feedback comes after an initial review, or can be switched on and off by the physician, it allows them to engage fully with the case first and use AI to confirm or challenge their conclusions. This approach helps preserve and even sharpen diagnostic skills by encouraging active problem-solving rather than passive acceptance of machine outputs.

Can you elaborate on how transparency in AI decision-making processes can support doctors in their clinical reasoning?

Transparency is vital because it builds trust and alignment between the doctor and the AI system. When AI shows how it arrived at a conclusion—say, by highlighting specific features in an image it considered or explaining why certain data points were weighted heavily—it allows doctors to follow the logic and compare it with their own reasoning. This can spark deeper analysis, like considering alternative diagnoses or questioning the AI’s emphasis on certain factors. It’s almost like having a colleague explain their thought process, which enriches the diagnostic conversation and ensures the doctor remains in the driver’s seat.

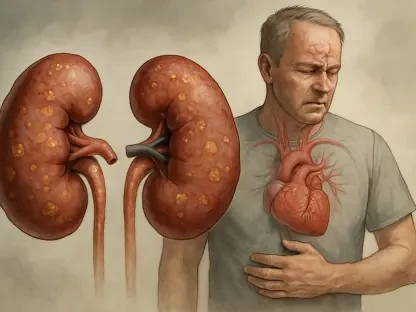

What are the potential downsides of doctors becoming overly reliant on AI, and how might this impact their critical thinking?

Over-reliance on AI can lead to a kind of mental shortcut where doctors stop questioning the technology’s outputs. If they trust AI too much, they might overlook errors or miss diagnoses that fall outside the algorithm’s training data. This complacency can dull critical thinking, as they rely less on their own knowledge and experience. It’s a bit like using a GPS all the time—you might forget how to navigate without it. Over time, this could create a dangerous gap in decision-making, especially in complex cases where human intuition and reasoning are irreplaceable.

Why is it so critical to consider human factors like trust and timing when designing AI tools for medical professionals?

Human factors are at the heart of whether AI succeeds or fails in healthcare. Trust determines if doctors will even use the tool—if they don’t believe in its reliability, they’ll ignore it, wasting potential benefits. Timing, as we discussed, shapes how AI influences independent thinking and skill retention. If these elements are ignored, you risk creating a tool that frustrates users or, worse, leads to errors. Designing with human behavior in mind ensures AI enhances rather than disrupts the delicate balance of clinical judgment, ultimately making it a partner rather than a hindrance.

What steps do you believe are necessary to ensure AI supports rather than overshadows a doctor’s expertise in clinical practice?

First, AI must be designed as a collaborative tool, not a replacement. This means involving clinicians in the development process to tailor interfaces and functionalities to real-world needs. Training programs should also emphasize how to use AI as a second opinion, not a crutch, encouraging doctors to always validate outputs with their own judgment. Additionally, systems should be built to adapt—offering adjustable levels of assistance based on a doctor’s experience or the case’s complexity. Finally, ongoing evaluation in clinical settings is key to spot and fix integration issues before they harm patient care. It’s about creating a synergy where AI and human expertise both shine.

Looking ahead, what is your forecast for the role of AI in clinical practice over the next decade?

I believe AI will become an indispensable part of clinical practice, evolving from a niche tool to a standard component of healthcare delivery. We’ll likely see it deeply embedded in diagnostic workflows, not just for detection but also for predicting patient trajectories and personalizing treatments. However, the real challenge—and opportunity—lies in perfecting the human-AI partnership. I foresee a future where AI systems are smarter about when and how to assist, adapting to individual doctors’ styles and needs. If we get the balance right, addressing trust, skill preservation, and ethical concerns, AI could dramatically elevate the standard of care while keeping the human touch at the center of medicine.