In the rapidly evolving landscape of healthcare and artificial intelligence, Ivan Kairatov stands out as a leading voice, especially on the complex intersection of bioethics, law, and technology in the biopharma industry. His extensive experience in research and development gives him a unique vantage point on the challenges and opportunities that arise as AI becomes more integrated into healthcare, including the accuracy of race and ethnicity data. Kairatov’s insights are particularly timely, given recent calls in the field to standardize data collection methods to improve the reliability and equity of AI systems.

What are the main issues with the accuracy of race and ethnicity data found in electronic health records (EHRs)?

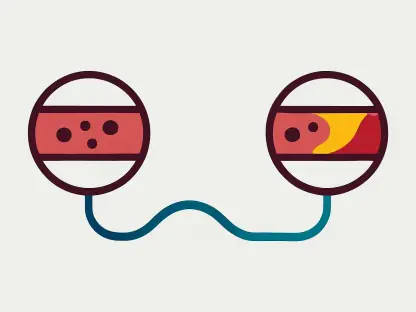

The accuracy of race and ethnicity data in EHRs is frequently compromised by inconsistent collection methods and classification errors. Hospitals often follow different standards, which introduces substantial inaccuracy and inconsistency. As a result, incorrect or incomplete data can be fed into AI systems, which then perpetuate systemic problems such as racial bias.

How can inaccurate race and ethnicity data in EHRs impact patient care?

Inaccurate data can lead to a cascade of poor outcomes in patient care. It can cause AI systems to make flawed predictions or recommendations, which ultimately affect diagnosis and treatment plans. This is particularly concerning for minority groups, who may already face disparities in healthcare access and quality; flawed AI outputs could further exacerbate those inequities.

Why is the standardization of race and ethnicity data collection important in healthcare?

Standardization is crucial because it ensures consistency and reliability in data across different healthcare systems. By having a unified approach to data collection, healthcare providers and AI developers can make more accurate and fair assessments, reducing the potential for bias. It also facilitates more meaningful comparisons and analyses in research and development, ultimately benefiting patient care.

What are the potential consequences of racial bias being perpetuated by AI systems?

Racial bias in AI systems can undermine trust and exacerbate health disparities. If biases become entrenched in AI tools, they can lead to unjust treatment recommendations or interventions, with some groups receiving inferior care compared to others. Perpetuating inequity in this way could also erode the overall credibility and effectiveness of AI in healthcare.

Can you explain the recommendations made by the authors regarding the collection and disclosure of race and ethnicity data in medical AI systems?

The authors recommend that developers disclose the methods used to collect race and ethnicity data in order to enhance transparency. They argue that by explicitly stating how the data were gathered, developers allow both regulators and patients to better evaluate the safety and effectiveness of AI tools. Such a disclosure works somewhat like a warranty of data quality, much as nutrition labels give consumers clarity about the contents of food products.

How can increased transparency in disclosing race and ethnicity data affect the assessment of medical devices by patients and regulators?

Increased transparency allows for a more thorough evaluation of the AI systems’ safety and efficacy. For patients, it provides assurance that the data influencing their care is accurate and responsibly collected, thereby fostering trust. For regulators, it offers a clearer foundation to assess compliance with ethical and legal standards, ensuring devices meet the required benchmarks before reaching the market.

In what way do the authors compare their proposed disclaimers with nutrition labels?

The authors draw an analogy with nutrition labels to show how disclaimers could inform stakeholders about the quality and origins of the data used in AI systems. Just as nutrition labels provide critical information about what consumers ingest, these disclaimers would clarify the composition of the data used in AI tools, promoting informed decision-making.

How does Francis Shen view the current state of race bias in AI models, and what concerns does he express?

Francis Shen sees significant cause for concern about race bias in AI models as healthcare technology advances. He stresses that without concrete measures to address these biases, AI could perpetuate existing inequalities rather than mitigate them. Shen’s position is a call to action for ongoing research and dialogue to refine AI practices and improve equity.

What steps do the authors suggest to achieve improvements in the accuracy of race and ethnicity data?

The authors suggest implementing standardized data collection protocols, fostering open dialogue among stakeholders, and ensuring that AI system developers disclose their data collection methods. These steps aim to create a more reliable dataset, improve AI tool effectiveness, and maintain accountability within the industry.

Who supported the research, and what role did these organizations play in it?

The research was supported by the NIH Bridge to Artificial Intelligence (Bridge2AI) program and an NIH BRAIN Neuroethics grant. These organizations provided funding and resources necessary for conducting the research, underscoring their commitment to promoting ethical AI practices in healthcare.

According to Lakshmi Bharadwaj, why is an open dialogue about best practices crucial?

Lakshmi Bharadwaj emphasizes that open dialogue is essential because it enables stakeholders to share perspectives, refine practices, and develop innovative solutions collaboratively. Engaging in such discussions fosters understanding and continuous improvement, which are critical for effectively addressing the complexities of bioethics in AI deployment.

Could you explain what the NIH Bridge to Artificial Intelligence program is and how it was involved in the research?

The NIH Bridge to Artificial Intelligence program aims to accelerate the integration of AI into biomedical and behavioral research. Its involvement in the research provided crucial support through funding and collaboration opportunities, facilitating the development of guidelines and strategies for improving race and ethnicity data accuracy.

How might healthcare providers benefit from the standardization of race and ethnicity data?

Standardization can significantly enhance the quality of care provided, as it leads to more accurate patient profiles and treatment plans. With reliable data, healthcare providers can better assess individual patient needs and tailor their care approaches accordingly, reducing health disparities and improving outcomes for all patient demographics.

What are some best practices that healthcare systems and medical AI researchers can adopt to enhance data accuracy?

Best practices include adopting uniform data collection standards, continuously auditing data for quality and biases, and integrating diverse perspectives in the development of AI tools. By committing to these practices, healthcare systems and researchers can ensure that AI technologies are fair, reliable, and beneficial for all patient populations.

What impact could improved race and ethnicity data have on the development of AI-based healthcare tools?

Improved data accuracy would lead to more effective and equitable AI tools, fostering better patient outcomes and less bias. It would help developers create more precise predictive models and diagnostic tools, enhancing the overall quality and trustworthiness of AI solutions in healthcare.

Do you have any advice for our readers?

For anyone interested in this field, I encourage staying informed and actively participating in discussions about ethics and data standards in AI. As healthcare technology evolves, it is important for professionals, patients, and policymakers alike to advocate for transparency and equity, ensuring that innovations benefit all sections of society.