At the forefront of pharmaceutical innovation, Ivan Kairatov is reimagining how essential medicines are made. As a leading expert in biopharma, his work merges the precision of continuous flow chemistry with the intelligence of advanced AI and real-time analytics. In a landscape reshaped by global supply chain vulnerabilities, his team’s recent project, PIPAc, stands as a beacon for the future: a fully autonomous, compact, and highly efficient platform for producing active pharmaceutical ingredients (APIs). This initiative not only tackles the technical complexities of modern synthesis but also pioneers a new paradigm for resilient, localized drug manufacturing.

This conversation explores the journey behind this next-generation approach. We will delve into the critical, data-driven decisions that guided the project, such as the strategic pivot from propofol to fentanyl synthesis. We will also uncover the engineering challenges of integrating complex systems from multiple partners into a compact, regulated space and examine how deep reinforcement learning enables the AI to move beyond simple automation to predictive process control. Finally, we’ll discuss the transformative role of in-line NMR analytics in providing the rich, real-time data needed for a truly autonomous system and look ahead to the future of smart pharmaceutical production.

You mentioned abandoning propofol synthesis due to issues with sulfuric acid and catalysis. Could you walk us through the step-by-step process of how your team identified these roadblocks and what specific data led you to pivot the project’s focus entirely to the last step of fentanyl synthesis?

It was a challenging but necessary decision, driven entirely by the data we were seeing in the lab. When we started with propofol, the first red flag was the sulfuric acid. In a continuous flow system, every material has to be perfectly compatible with your equipment, and we immediately ran into issues with our pumps and tubing. Beyond the materials science, the chemistry itself was problematic; the stability and solubility of our starting materials were just not cooperating under our reaction conditions. We felt a glimmer of hope when we experimented with a heterogeneous acid catalyst. The initial results were encouraging, but when we looked at the numbers, the conversion rates and selectivity for propofol simply weren’t high enough for an industrial process. Staring at that data, we had to be pragmatic. We pivoted to fentanyl, and even then, we decided to focus solely on the final synthesis step to meet our aggressive timelines and the constraints of the industrial demonstrator’s footprint.

Your team integrated systems from Bruker, Alysophil, and Siemens within a compact 15-square-meter, ATEX-compliant space. Could you share an anecdote about the biggest engineering hurdle you faced and how you successfully used the OPC UA protocol to get these different systems communicating for the first time?

Imagine standing in a space not much larger than a small bedroom, surrounded by a reactor, an NMR machine, and a control system, all needing to operate under strict pharmaceutical and explosive-atmosphere (ATEX) regulations. The physical constraint of that 15-square-meter footprint was immense. The real hurdle, though, wasn’t just fitting the hardware in; it was making these sophisticated systems from different vendors—Bruker’s analytics, Alysophil’s AI, and our Siemens-based control architecture—speak the same language. I vividly remember the moment we were trying to bridge them. It felt like we had a group of brilliant experts in a room, all speaking different languages. The breakthrough came when we fully committed to the OPC UA communication protocol. Seeing the first data packet flow seamlessly from the process sensors, through our Siemens PCS 7 controller, and appear on the WinCC supervisory screen, then get pushed to the AI, was a huge victory. That was the moment it stopped being a collection of parts and became a single, intelligent organism.

The article states your AI uses deep reinforcement learning to predict issues before they impact yield. Could you share a specific example or metric from the digital twin simulations showing how the AI anticipated a process deviation, like a temperature drop, and what corrective actions it took?

This is where our system truly moves beyond traditional automation. We trained our AI agents on a digital twin, running hundreds of simulated production runs to teach them the process dynamics. A perfect example is the handling of thermal fluctuations. In one simulation, we programmed a gradual, subtle drop in the reactor temperature, something that might not trigger an immediate alarm in a conventional system but would slowly degrade the final yield. The AI, having learned the correlation between temperature, flow rate, and conversion from those runs, didn’t just wait for the temperature to fall below a set threshold. It detected the trend of the temperature drop and predicted its future impact. Before the yield was affected at all, the AI autonomously increased the flow rate of the heating fluid just enough to counteract the drop, stabilizing the system proactively. It’s this predictive capability, the ability to act on what will happen rather than what has happened, that makes this approach so powerful.
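To make the contrast between threshold alarms and trend-based prediction concrete, here is a minimal sketch. It is not the deep reinforcement learning agent described above: it swaps in a plain least-squares trend line as a stand-in for the learned model, and every name and number in it (`needs_early_correction`, the 79.0 limit, the 10-step horizon) is a hypothetical chosen for illustration.

```python
from statistics import mean


def slope_per_step(readings):
    """Least-squares slope of the readings against their sample index."""
    if len(readings) < 2:
        raise ValueError("need at least two readings to estimate a trend")
    xs = range(len(readings))
    x_bar, y_bar = mean(xs), mean(readings)
    num = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, readings))
    den = sum((x - x_bar) ** 2 for x in xs)
    return num / den


def predicted_value(readings, horizon):
    """Extrapolate the latest reading `horizon` steps ahead along the trend."""
    return readings[-1] + slope_per_step(readings) * horizon


def needs_early_correction(readings, low_limit, horizon):
    """True when the value is still in range now but the trend says it
    will cross below `low_limit` within `horizon` steps."""
    still_in_range = readings[-1] >= low_limit
    return still_in_range and predicted_value(readings, horizon) < low_limit


# Hypothetical reactor temperatures (degrees C), drifting down slowly.
drifting = [80.0, 79.9, 79.8, 79.7, 79.6]
steady = [80.0, 80.0, 80.0, 80.0, 80.0]

# A fixed threshold at 79.0 would stay silent for both series,
# but the trend check flags the drifting one ahead of time.
print(needs_early_correction(drifting, low_limit=79.0, horizon=10))
print(needs_early_correction(steady, low_limit=79.0, horizon=10))
```

A learned policy would go further than this, weighing flow rate and conversion together and choosing the size of the correction, but the basic idea of acting on the projected value instead of the current one is the same.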

You highlighted the Fourier 80 NMR’s ability to quantify multiple analytes simultaneously via the synTQ software. Can you provide a concrete example of how this real-time, multi-attribute data stream allowed the AI or an operator to make a critical process adjustment that wouldn’t have been possible with traditional analytics?

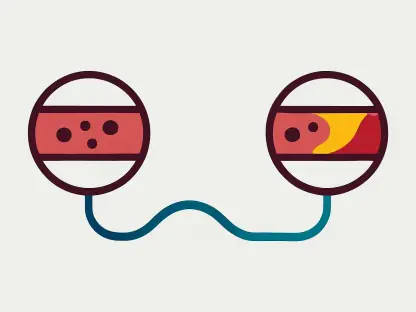

Absolutely. In a traditional setup, you might use a technique like HPLC, where you take a sample, run it for several minutes, and get one data point—say, the concentration of your final product. With the in-line Fourier 80 NMR, we get a complete chemical snapshot every few seconds without ever breaking the flow. In one instance, the NMR data, orchestrated through the synTQ software, showed not only that the fentanyl concentration was steady, but also that the concentration of a key intermediate was beginning to creep up slightly. A traditional method would have missed this nuance entirely. Our AI, however, interpreted this as a sign of slowing reaction kinetics. It immediately made a micro-adjustment to the residence time by slightly tweaking the flow rate, bringing the intermediate back down to its optimal level and preventing a potential drop in overall conversion. That kind of granular, multi-attribute control in real time is a game-changer for process optimization and quality assurance.
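The link between flow rate and residence time mentioned in this answer follows from the standard relation for a flow reactor, where mean residence time equals reactor volume divided by volumetric flow rate. The sketch below only illustrates that arithmetic; the reactor volume, flow rates, and function names are assumptions for the example, not figures from the PIPAc platform.

```python
def residence_time_min(reactor_volume_ml, flow_rate_ml_min):
    """Mean residence time (min) = reactor volume / volumetric flow rate."""
    if flow_rate_ml_min <= 0:
        raise ValueError("flow rate must be positive")
    return reactor_volume_ml / flow_rate_ml_min


def flow_rate_for(reactor_volume_ml, target_residence_min):
    """Flow rate (mL/min) that gives the requested mean residence time."""
    if target_residence_min <= 0:
        raise ValueError("residence time must be positive")
    return reactor_volume_ml / target_residence_min


# Hypothetical values: a 10 mL reactor running at 2.0 mL/min.
volume_ml = 10.0
current_flow = 2.0
current_tau = residence_time_min(volume_ml, current_flow)

# If an intermediate starts to build up, give the reaction 10% more time
# by lowering the flow rate accordingly.
new_flow = flow_rate_for(volume_ml, current_tau * 1.1)
print(f"residence time now: {current_tau:.2f} min")
print(f"flow rate for 10% longer residence: {new_flow:.3f} mL/min")
```

Because the relation is inverse, a small reduction in flow rate buys a proportionally longer residence time, which is why a slight tweak to the pumps can be enough to bring an intermediate back down.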

What is your forecast for the adoption of fully autonomous, AI-driven flow chemistry platforms in pharmaceutical manufacturing over the next five to ten years?

I believe we are at an inflection point. The next five to ten years will see a significant shift from pilot projects like ours to widespread industrial implementation. The technology—the flow reactors, the in-line analytics like NMR, and the AI—has matured. More importantly, the industry’s mindset has changed, driven by the harsh lessons of the pandemic about supply chain fragility. These autonomous platforms offer unparalleled precision, efficiency, and scalability, but their greatest promise is resilience. I forecast that these smart, self-regulating facilities will become the cornerstone of Pharma 4.0, first for critical and essential medicines, and then expanding across the board. We will move away from monolithic, overseas plants to a network of smaller, more agile, and fully autonomous manufacturing sites that can respond to demand in real time, ensuring that critical medicines are always within reach.