In one study, more than 35% of language model responses to addiction-related questions contained stigmatizing language. This startling statistic highlights a significant issue in AI-driven communication within healthcare. How might such biases, which reflect broader societal prejudices, affect patient trust and healthcare outcomes?

The Power of Words in Healthcare

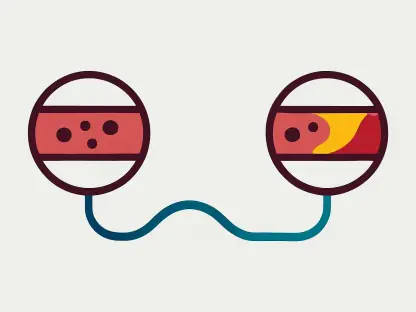

Language plays a crucial role in healthcare interactions, with the potential to strengthen or undermine the patient-provider relationship, ultimately influencing health outcomes. When AI systems, like language models, use stigmatizing terms, they risk damaging trust between patients and healthcare professionals. Such biases in AI are problematic, as they mirror existing societal prejudices and can exacerbate the challenges faced by those seeking care for addiction-related issues.

Uncovering Bias Sources: An In-Depth Look

Language models learn from vast data sets of everyday language, and that language often carries inherent biases. A case study from Mass General Brigham analyzed 14 language models’ responses to addiction-related queries. The study found that 35.4% of these responses included stigmatizing language, underscoring the urgent need for strategies to address the issue. Prompt engineering, a technique that steers a model’s responses through carefully worded instructions, emerged as a key mitigation: applying it substantially reduced the share of responses containing stigmatizing language.
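To make the idea of prompt engineering concrete, here is a minimal sketch in Python. It assumes a chat-style interface that accepts a list of role/content messages. The instruction text and the `build_messages` helper are hypothetical illustrations, not the prompts used in the study, which this article does not reproduce.

```python
from typing import Dict, List

# Hypothetical instruction text (an assumption for illustration only;
# the study's actual prompts are not given in this article).
PERSON_FIRST_INSTRUCTION = (
    "Answer using person-first, non-stigmatizing language. "
    "For example, say 'person with a substance use disorder' "
    "rather than 'addict'."
)


def build_messages(question: str, use_prompt_engineering: bool = True) -> List[Dict[str, str]]:
    """Build a chat-style message list for an addiction-related question.

    When use_prompt_engineering is True, a system instruction asking for
    non-stigmatizing language is placed ahead of the user's question.
    """
    messages: List[Dict[str, str]] = []
    if use_prompt_engineering:
        messages.append({"role": "system", "content": PERSON_FIRST_INSTRUCTION})
    messages.append({"role": "user", "content": question})
    return messages
```

Sending the same question with and without the instruction, then comparing the responses, is one simple way to see how much the added guidance changes a model's wording.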

Expert Insights and Patient Experiences

Medical professionals and researchers highlight the pervasive nature of bias in AI-generated content. The study used guidelines from the National Institute on Drug Abuse as its benchmark for identifying stigmatizing language. Personal anecdotes from patients who have experienced stigmatization underscore the real-world consequences. These stories shed light on the barriers patients encounter and reinforce the need for more empathetic, inclusive language in healthcare communication.

Engineering a Path Forward

Mitigating bias in AI requires deliberate efforts such as prompt engineering to influence language models’ responses. Healthcare professionals play a vital role in screening AI-generated content to ensure it’s free from stigmatizing language before use in patient interactions. Additionally, including patients and families in developing less stigmatizing language fosters a more inclusive approach to addressing addiction, creating a collaborative environment that respects diverse experiences and needs.
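As a rough illustration of what screening might look like in practice, the Python sketch below flags a few commonly cited stigmatizing terms in a piece of AI-generated text and suggests person-first alternatives. The term list and the `flag_stigmatizing_terms` helper are assumptions made for this example; they are not the study's rubric and are far from exhaustive. A simple word match also cannot judge context (it would flag "alcoholic" in "alcoholic beverages"), so a check like this can support human review but not replace it.

```python
import re

# Illustrative term list (an assumption for this sketch), loosely following
# person-first language guidance. It is not the rubric used in the study.
SUGGESTED_ALTERNATIVES = {
    "addict": "person with a substance use disorder",
    "alcoholic": "person with alcohol use disorder",
    "substance abuse": "substance use or misuse",
    "drug abuser": "person who uses drugs",
}


def flag_stigmatizing_terms(text):
    """Return (position, matched text, suggested alternative) tuples.

    Matches whole words only, case-insensitively, and allows a plural "s",
    so "addicts" is flagged but "addiction" is not.
    """
    findings = []
    for term, alternative in SUGGESTED_ALTERNATIVES.items():
        pattern = r"\b" + re.escape(term) + r"s?\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            findings.append((match.start(), match.group(0), alternative))
    # Sort by position so a reviewer can read the flags in document order.
    return sorted(findings)


draft = "The addict asked about substance abuse treatment."
for position, found, alternative in flag_stigmatizing_terms(draft):
    print(f"{position}: '{found}' -> consider '{alternative}'")
```

A reviewer could run a draft response through a check like this before it reaches a patient, then decide case by case whether each flagged phrase needs rewording.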

Embracing a Responsible AI Future

The findings underscore the critical need for human oversight in curating AI outputs. Balancing technology’s benefits with empathy and sensitivity when discussing addiction could transform healthcare communication. Beyond these technical solutions, future strategies include training AI to detect potential biases and continuously engaging stakeholders in refining AI applications. As this dialogue evolves, rethinking how AI contributes to effective, respectful healthcare communication could open new room for innovation in patient care.