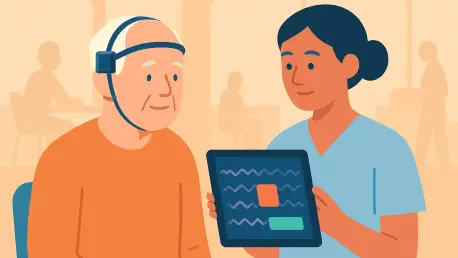

Dementia’s slow drift can feel inevitable to families and clinicians, yet spotting subtle decline early rather than late often marks the difference between measured planning and crisis response, with consequences that ripple through lives and budgets alike. EEG stands out as a noninvasive, relatively low-cost way to capture brain dynamics in real time, but its signals are notoriously complex, full of time-varying rhythms that resist quick visual interpretation. Research from Örebro University and collaborators has zeroed in on that gap by pairing deep learning with rigor around transparency and privacy. The aim is not a flashy algorithm but a workable path: explainable models that reveal which rhythms matter, trained without moving sensitive data, and compact enough to run on modest hardware in clinics and, eventually, everyday settings.

Why EEG AI for dementia now?

Early identification is not a feel-good slogan; it alters care pathways. When cognitive change is flagged sooner, clinicians can adjust medications, address sleep and mood, and plan support that keeps people safer at home. Traditional routes lean on expensive imaging, specialized expertise, and long waitlists that delay action. EEG offers a pragmatic complement: it is widespread, safe, and fast. The challenge is interpretation, because disease-related shifts are subtle, blend across bands like alpha and beta, and unfold over seconds to minutes. Deep learning excels at finding patterns in such complex signals, but two barriers have slowed adoption: opaque behavior that undermines trust, and data centralization that threatens privacy.
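Band-specific shifts like these are often summarized as relative band power. The sketch below computes it with a naive discrete Fourier transform in plain Python. The band edges are conventional values assumed for illustration, and nothing here reproduces the studies' own preprocessing, which is not described in this article.

```python
import math
from typing import Dict, Sequence

# Conventional EEG band edges in Hz (assumed; exact definitions vary between labs).
BANDS = {"delta": (1.0, 4.0), "theta": (4.0, 8.0), "alpha": (8.0, 13.0), "beta": (13.0, 30.0)}


def relative_band_power(signal: Sequence[float], fs: float) -> Dict[str, float]:
    """Share of 1-30 Hz power falling in each band, from a naive O(n^2) DFT periodogram."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC offset
    power = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):
        freq = k * fs / n  # centre frequency of DFT bin k
        re = sum(x[t] * math.cos(2 * math.pi * k * t / n) for t in range(n))
        im = -sum(x[t] * math.sin(2 * math.pi * k * t / n) for t in range(n))
        for name, (lo, hi) in BANDS.items():
            if lo <= freq < hi:
                power[name] += re * re + im * im
    total = sum(power.values()) or 1.0  # avoid dividing by zero on a flat signal
    return {name: p / total for name, p in power.items()}


# Two seconds of a synthetic trace at an assumed 128 Hz: strong 10 Hz alpha, weaker 20 Hz beta.
fs = 128
trace = [math.sin(2 * math.pi * 10 * t / fs) + 0.3 * math.sin(2 * math.pi * 20 * t / fs)
         for t in range(2 * fs)]
print(relative_band_power(trace, fs))
```

Because both tones fall exactly on DFT bins, alpha receives about 1/1.09 (roughly 0.917) of the in-band power and beta the rest. Real recordings would normally be windowed and averaged (for example with Welch's method) before a step like this.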

Meeting those barriers head-on shifts the question from “Can AI read EEG?” to “Can clinicians rely on it?” Trust hinges on models that justify a classification by pointing to waveform segments and bandwidths that match clinical reasoning. Privacy hinges on training methods that never move raw patient data across institutions, reducing regulatory burdens and breach risk. Efficiency matters, too: hospitals and community clinics cannot bet on heavyweight servers to run every inference. By uniting explainability, federated learning, and lightweight design, the emerging approach reframes EEG AI as a practical tool for screening, triage, and support rather than a lab curiosity, aligning technical progress with health system realities.

Two complementary studies

The first study built an explainable classifier to separate Alzheimer’s disease, frontotemporal dementia, and healthy controls, a tougher problem than binary discrimination because overlapping symptoms and electrophysiology blur the boundaries. The model fuses temporal convolutional networks (TCNs), whose dilated filters stretch across time to capture multi-scale rhythms, with LSTMs, which track longer dependencies spanning several seconds of EEG. Crucially, explanation is built in: saliency maps reveal which time windows and frequency bands shape each decision, giving clinicians a rationale that can be debated, audited, and taught. Efficiency was also a design goal, so that computation stays feasible on the hardware available in clinical settings.
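The article does not say which saliency method the study used. As a stand-in, the sketch below uses occlusion, one simple model-agnostic way to build a saliency map over time: blank out one window at a time and record how much the classifier's score drops. The function `toy_score` is a hypothetical placeholder for a trained model's class probability.

```python
from typing import Callable, List, Sequence


def occlusion_saliency(signal: Sequence[float],
                       score: Callable[[Sequence[float]], float],
                       window: int) -> List[float]:
    """Importance of each non-overlapping window = drop in score when it is zeroed out."""
    baseline = score(signal)
    saliency = []
    for start in range(0, len(signal), window):
        occluded = list(signal)
        for i in range(start, min(start + window, len(signal))):
            occluded[i] = 0.0  # mask this segment
        saliency.append(baseline - score(occluded))
    return saliency


def toy_score(x: Sequence[float]) -> float:
    """Hypothetical model that only 'looks at' samples 20-29."""
    return sum(v * v for v in x[20:30])


print(occlusion_saliency([1.0] * 40, toy_score, window=10))  # [0.0, 0.0, 10.0, 0.0]
```

Only the third window matters to this toy model, so only its occlusion lowers the score. Applying the same idea per frequency band, for instance by filtering out one band before rescoring, gives a band-level view; gradient-based saliency is a common, faster alternative for deep networks.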

The second study took a different angle: protect privacy and enable scale without sacrificing accuracy. A compact network based on EEGNetv4, paired with a hybrid-fusion strategy, was trained through federated learning. Sites keep EEG data local and share only model updates, so the global model improves while raw signals never leave their origin. The result is a sub–1 MB model that fits on edge devices and runs in real time, opening the door to portable or embedded deployments. Together, the two lines of work form a coherent blueprint: one maximizes interpretability for multi-class differentiation, the other maximizes deployability and collaborative training, and both target real-world use.
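The article does not name the aggregation rule, so the sketch below assumes federated averaging (FedAvg), a common choice: each site sends its locally trained weights and its sample count, and the server forms a weighted mean. Weights are flattened into named lists for clarity; `federated_average` is a hypothetical helper, not an API from any framework.

```python
from typing import Dict, List, Tuple

Weights = Dict[str, List[float]]  # parameter name -> flattened values


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average each parameter across sites, weighted by how many samples each site trained on."""
    total = sum(n for _, n in updates)
    if total == 0:
        raise ValueError("sites reported no training samples")
    first_weights, _ = updates[0]
    merged: Weights = {name: [0.0] * len(vals) for name, vals in first_weights.items()}
    for weights, n in updates:
        for name, vals in weights.items():
            for i, v in enumerate(vals):
                merged[name][i] += v * n / total
    return merged


# Only weights and counts leave each site; the EEG recordings stay local.
site_a = ({"w": [1.0, 2.0]}, 100)
site_b = ({"w": [3.0, 4.0]}, 300)
print(federated_average([site_a, site_b]))  # {'w': [2.5, 3.5]}
```

Weighting by sample count keeps a small site from pulling the global model as hard as a large one; with skewed data across sites, variants of this rule are often preferred.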

Inside the models

Under the hood, the TCN+LSTM framework addresses EEG’s dual nature: bursts of activity that carry local signatures and slower evolutions that require memory. Dilated convolutions scan for patterns across multiple temporal scales without exploding compute cost, while LSTMs maintain context to detect long-range dependencies tied to disease states. Built-in explainability grounds outputs in physiology, highlighting contributions from alpha, beta, or gamma activity and flagging specific epochs that sway the verdict. This turns a classification into an evidence trail, useful for clinician review, quality assurance, and hypothesis generation about neural mechanisms that might differentiate AD from FTD.
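To make the multi-scale argument concrete, the sketch below implements a single dilated causal convolution and the standard receptive-field formula for a stack of them. Kernel size, dilation schedule, and the 256 Hz sampling rate in the note are assumptions for illustration, not the published architecture.

```python
from typing import List, Sequence


def dilated_causal_conv(x: Sequence[float], kernel: Sequence[float],
                        dilation: int) -> List[float]:
    """y[t] = sum_j kernel[j] * x[t - j*dilation]; samples before t=0 are treated as zero."""
    out = []
    for t in range(len(x)):
        acc = 0.0
        for j, w in enumerate(kernel):
            idx = t - j * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out


def receptive_field(kernel_size: int, dilations: Sequence[int]) -> int:
    """Input samples that can influence one output of a stack of dilated causal layers."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)


# An impulse shows the gaps: with dilation 2, each output sees every second sample.
print(dilated_causal_conv([1, 0, 0, 0, 0, 0], [1, 1, 1], dilation=2))  # [1.0, 0.0, 1.0, 0.0, 1.0, 0.0]
print(receptive_field(3, [1, 2, 4, 8, 16]))  # 63
print(receptive_field(3, [1] * 5))           # 11
```

With dilations doubling up to 256 (nine layers), the receptive field reaches 1,023 samples, about 4 s at 256 Hz, while nine undilated layers reach only 19 samples. The per-layer cost stays the same; only the spacing of the taps changes.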

By contrast, the federated model’s strength lies in parsimony and governance. EEGNetv4’s compact design emphasizes depthwise separable convolutions that reduce parameters while preserving discriminative power. The hybrid-fusion approach blends spatial and temporal cues efficiently, and the training loop operates across institutions without centralizing recordings. Model updates can be encrypted and aggregated, mitigating risks while enabling exposure to varied data distributions that improve generalization. Because the footprint sits under one megabyte, inference can run on laptops, tablets, or small edge modules tethered to EEG headsets, a practical constraint that often derails otherwise accurate but bulky systems.
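The parameter savings of depthwise separable convolutions follow from simple counting. The layer sizes below (16 input and output feature maps, a 1×16 temporal kernel) are illustrative assumptions, not EEGNetv4's published configuration, and biases are ignored.

```python
def standard_conv_params(c_in: int, c_out: int, kh: int, kw: int) -> int:
    """A regular 2-D convolution learns one kh x kw filter per (input, output) channel pair."""
    return c_in * c_out * kh * kw


def separable_conv_params(c_in: int, c_out: int, kh: int, kw: int) -> int:
    """Depthwise: one kh x kw filter per input channel; pointwise: a 1x1 mix across channels."""
    depthwise = c_in * kh * kw
    pointwise = c_in * c_out
    return depthwise + pointwise


full = standard_conv_params(16, 16, 1, 16)        # 4096 weights
separable = separable_conv_params(16, 16, 1, 16)  # 256 + 256 = 512 weights
print(full, separable, full / separable)          # 4096 512 8.0
```

The saving grows with both kernel size and channel count, which is why stacking such layers keeps a whole network small.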

Results that matter

Performance numbers tell only part of the story, but they set the stage. The TCN+LSTM system surpassed 80% accuracy for three-class classification across Alzheimer’s disease, frontotemporal dementia, and healthy cohorts—an encouraging mark given overlapping symptoms and EEG features that tend to blur lines. The model’s explanations add clinical value by surfacing which frequency bands and time segments carried weight, turning scores into interpretable narratives. That interpretability supports calibration against clinician judgment, helping teams identify where the algorithm aligns with known patterns and where it might be overconfident, a necessary step before deployment in decision support.
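A single accuracy figure can hide exactly the AD/FTD overlap described above. The sketch below builds a three-class confusion matrix and per-class recall. The ten labels are made-up toy values, not data from either study, and the class abbreviations are assumed.

```python
from typing import Dict, List, Sequence, Tuple

CLASSES = ("AD", "FTD", "HC")  # Alzheimer's, frontotemporal dementia, healthy controls


def confusion_matrix(y_true: Sequence[str], y_pred: Sequence[str]) -> List[List[int]]:
    """Rows are true classes, columns are predicted classes."""
    index = {c: i for i, c in enumerate(CLASSES)}
    m = [[0] * len(CLASSES) for _ in CLASSES]
    for t, p in zip(y_true, y_pred):
        m[index[t]][index[p]] += 1
    return m


def accuracy_and_recall(m: List[List[int]]) -> Tuple[float, Dict[str, float]]:
    total = sum(sum(row) for row in m)
    correct = sum(m[i][i] for i in range(len(CLASSES)))
    recall = {c: (m[i][i] / sum(m[i]) if sum(m[i]) else float("nan"))
              for i, c in enumerate(CLASSES)}
    return correct / total, recall


y_true = ["AD"] * 4 + ["FTD"] * 3 + ["HC"] * 3
y_pred = ["AD", "AD", "AD", "FTD", "FTD", "FTD", "AD", "HC", "HC", "HC"]
m = confusion_matrix(y_true, y_pred)
acc, recall = accuracy_and_recall(m)
print(m)    # [[3, 1, 0], [1, 2, 0], [0, 0, 3]]
print(acc)  # 0.8
print(recall)
```

Here overall accuracy is 0.8, yet FTD recall is only 2/3, and every error sits in the AD/FTD corner of the matrix.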

The federated, compact model reported accuracy above 97% while keeping data on-premise, a striking combination of privacy preservation and performance. That result may rest on a different task framing than the three-class setting, so the two figures should not be compared directly; still, the takeaway is compelling: compactness and federated training do not inherently dilute accuracy in EEG-based dementia detection. Efficiency also mattered in practice. A sub–1 MB network lowers latency, reduces power demands, and eases integration with existing EEG systems. Taken together, the studies suggest that trustworthy, edge-ready AI need not trade away diagnostic signal; instead, it can bring that signal to the points of care where it can make a difference.
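The sub–1 MB figure translates directly into a parameter budget. The sketch below does that arithmetic for raw weights only. It assumes 1 MB means 1 MiB (1,048,576 bytes) and ignores serialization overhead, so real model files will be somewhat larger.

```python
MIB = 1024 * 1024  # assumed budget; use 1_000_000 for an SI megabyte


def weight_bytes(num_params: int, bytes_per_param: int = 4) -> int:
    """Raw storage for the weights (float32 = 4 bytes, float16 = 2, int8 = 1)."""
    return num_params * bytes_per_param


def max_params(budget_bytes: int = MIB, bytes_per_param: int = 4) -> int:
    """Largest parameter count whose raw weights fit within the budget."""
    return budget_bytes // bytes_per_param


print(max_params())                   # 262144 float32 parameters
print(max_params(bytes_per_param=1))  # 1048576 int8 parameters
print(weight_bytes(100_000) < MIB)    # True: 100k float32 parameters take 400,000 bytes
```

Int8 quantization is one common way to stretch the same budget; the article does not say whether the federated model used it.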

Privacy and real-world deployment

Federated learning changes the collaboration equation by allowing hospitals and clinics to contribute without surrendering raw EEG. Each site trains locally, shares model updates, and participates in aggregations that build a stronger, more generalizable network. This setup can ease compliance, streamline approvals, and encourage participation from partners that would balk at data pooling. It also positions multi-country consortia to grow datasets while aligning with privacy frameworks, a practical necessity for any technology that hopes to leave the lab. Governance matters here: who joins, how updates are vetted, and how models are versioned all shape trust.
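One way to let an aggregator combine updates it cannot read individually is pairwise masking, the core idea behind secure aggregation protocols. The sketch below is a toy version under stated assumptions: updates are already encoded as non-negative integers (fixed point), a seeded random generator stands in for the key agreement each pair of sites would perform, and sites dropping out mid-round are not handled. All helper names are hypothetical.

```python
import random
from itertools import combinations
from typing import Dict, List

MOD = 2 ** 32  # work modulo 2^32 so masks cancel exactly


def pairwise_masks(site_ids: List[str], dim: int, seed: int = 0) -> Dict[str, List[int]]:
    """For each pair (a, b), a adds a shared random vector and b subtracts it."""
    rng = random.Random(seed)  # stand-in for a secret shared by each pair of sites
    masks = {s: [0] * dim for s in site_ids}
    for a, b in combinations(sorted(site_ids), 2):
        shared = [rng.randrange(MOD) for _ in range(dim)]
        masks[a] = [(m + r) % MOD for m, r in zip(masks[a], shared)]
        masks[b] = [(m - r) % MOD for m, r in zip(masks[b], shared)]
    return masks


def mask_update(update: List[int], mask: List[int]) -> List[int]:
    """What a site actually sends: its update hidden under its combined mask."""
    return [(u + m) % MOD for u, m in zip(update, mask)]


def aggregate(masked_updates: List[List[int]]) -> List[int]:
    """The server sums masked vectors; each pairwise mask appears once with + and once with -."""
    return [sum(column) % MOD for column in zip(*masked_updates)]


updates = {"A": [1, 2, 3], "B": [10, 20, 30], "C": [100, 200, 300]}
masks = pairwise_masks(list(updates), dim=3)
sent = [mask_update(updates[s], masks[s]) for s in updates]
print(aggregate(sent))  # [111, 222, 333]
```

Each transmitted vector looks random on its own, but the sum is exact, which is all an averaging step needs.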

On the ground, deployment lives or dies by logistics. The sub–1 MB footprint broadens the range of compatible devices, enabling portable units in outpatient clinics, primary care, and even community centers. That reach could reduce travel burdens, shorten queues for specialist assessment, and enable more frequent check-ins that track change over time. Edge inference also limits reliance on stable high-bandwidth connections, which can be scarce in under-resourced settings. Combined with explainable outputs that clinicians can review and document, the technology slots into existing workflows rather than demanding a wholesale overhaul, a critical factor for adoption at scale.

Limits and what to watch

Any accuracy reported in a research environment rests on specific datasets, protocols, and preprocessing pipelines, so external validation remains a must. EEG quality varies with hardware, montage, and artifact control; patient factors like age, comorbidities, and medications further complicate the picture. Multi-class performance needs to hold across these subgroups, with careful attention to calibration, sensitivity, and specificity tuned to clinical risk thresholds. Explanations illuminate reasoning but do not prove causation. They are tools for scrutiny and learning, not substitutes for clinician judgment or mechanistic proof, and they should be evaluated for stability and faithfulness.
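Tuning to a clinical risk threshold usually means fixing a minimum sensitivity and accepting the specificity that comes with it. The article does not say how thresholds were chosen; the sketch below simply scans candidate thresholds on a binary risk score. Scores and labels are made-up toy values, and both classes are assumed to be present.

```python
from typing import Optional, Sequence, Tuple


def sensitivity_specificity(scores: Sequence[float], labels: Sequence[int],
                            threshold: float) -> Tuple[float, float]:
    """Labels: 1 = condition present, 0 = absent; a score >= threshold counts as positive."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def threshold_for_sensitivity(scores: Sequence[float], labels: Sequence[int],
                              target: float) -> Optional[Tuple[float, float, float]]:
    """Highest threshold that still meets the sensitivity target, which maximizes specificity."""
    for t in sorted(set(scores), reverse=True):  # sensitivity can only rise as t falls
        sens, spec = sensitivity_specificity(scores, labels, t)
        if sens >= target:
            return t, sens, spec
    return None


scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.2]
labels = [1, 1, 0, 1, 0, 1, 0, 0]
print(threshold_for_sensitivity(scores, labels, 0.75))  # (0.6, 0.75, 0.75)
print(threshold_for_sensitivity(scores, labels, 1.0))   # (0.4, 1.0, 0.5)
```

Demanding that every case be caught drops specificity from 0.75 to 0.5 on this toy data, the kind of trade-off a screening setting has to choose deliberately.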

Federated training, while promising, is not a turnkey solution. Real-world sites bring heterogeneity in data distributions, differing protocols, and variable participation that can skew optimization. Communication constraints, privacy budgets, and secure aggregation add engineering overhead. Robust governance—covering consent, auditability, rollback, and incident response—shapes whether collaborations endure. Monitoring for drift and periodically recalibrating models will be necessary as new devices, populations, and protocols enter the mix. Setting realistic expectations will also help clinicians: these tools should spotlight risk and guide next steps, not render definitive diagnoses in isolation.
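Drift monitoring can start with something as simple as comparing the distribution of a model's output scores (or an input feature) at a site against a reference period. The sketch below computes the population stability index (PSI), a common choice swapped in here for illustration. The bin edges and score samples are toy values, and the 0.1 and 0.25 cut-offs mentioned after the code are widely quoted rules of thumb, not clinically validated thresholds.

```python
import bisect
import math
from typing import List, Sequence


def _bin_shares(values: Sequence[float], edges: Sequence[float], eps: float) -> List[float]:
    """Share of values per bin; edges are interior cut points, so there are len(edges)+1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect.bisect_right(edges, v)] += 1
    return [max(c / len(values), eps) for c in counts]  # eps avoids log(0) for empty bins


def population_stability_index(reference: Sequence[float], current: Sequence[float],
                               edges: Sequence[float], eps: float = 1e-6) -> float:
    ref = _bin_shares(reference, edges, eps)
    cur = _bin_shares(current, edges, eps)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


edges = [0.25, 0.5, 0.75]
reference = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
shifted = [0.6, 0.65, 0.7, 0.8, 0.85, 0.9, 0.95, 0.99]
print(population_stability_index(reference, reference, edges))       # 0.0
print(population_stability_index(reference, shifted, edges) > 0.25)  # True
```

A PSI below about 0.1 is often read as stable and above about 0.25 as a shift worth investigating; in practice the bin edges are usually taken from reference quantiles rather than fixed by hand.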

Implications for care

If validated at scale, edge-ready EEG AI could reshape front doors to memory care. Primary care teams might use short EEG screenings to prioritize referrals, accelerating specialist access for those most likely to benefit. In neurology clinics, explainable classifications could support differentiation between Alzheimer’s disease and frontotemporal dementia, informing which follow-up tests to order and what counseling to provide. Over time, longitudinal assessments might reveal trajectories that signal when interventions should be revisited, moving management from snapshot decisions to trend-aware planning that better fits the way dementia unfolds.

Access and equity sit at the center of this vision. Because EEG is relatively inexpensive and widely available, pairing it with compact AI could bring advanced screening to communities that lack high-end imaging or dense specialist networks. Explainable outputs also help with training, auditing, and shared decision-making, giving clinicians and families clearer windows into why an assessment pointed one way or another. The federated approach invites broader participation in model development, which can diversify data and reduce bias—provided recruitment and validation consciously include underrepresented groups to avoid baking existing disparities into algorithms.

Next steps and clinical path

The research program pointed toward a practical agenda: scale datasets across institutions, expand feature sets to capture additional oscillatory and connectivity markers, and extend classification to cover vascular and Lewy body dementias. External validation should proceed alongside workflow integration studies that measure time saved, referral quality, and clinician trust. Model cards, version control, and explainability audits will help meet regulatory expectations, while privacy impact assessments and clear governance will sustain federated collaborations. Attention to calibration, subgroup performance, and drift monitoring will prepare systems for the variability of daily practice.

Looking ahead in concrete terms, a staged rollout makes sense: pilot deployments in specialist clinics to refine thresholds and explanations; expansion to primary care once calibration stabilizes; and exploration of home-based assessments for longitudinal monitoring once device usability and artifact handling prove robust. Partnerships with device makers could streamline integration, and pragmatic trials could quantify the outcomes that matter: earlier referrals, shorter wait times, and better care planning. With these steps, explainable, privacy-preserving EEG AI can move from promise to a credible path toward augmenting dementia screening and support across real-world settings.