AI-based algorithms are transforming health care, offering immense potential to improve medical outcomes while raising significant concerns about bias and disparities. Radiologist Judy Gichoya, M.D., and her team unexpectedly discovered that AI could predict a person’s race from radiological images, a result that underlines how complex these tools are. The finding raises critical questions about the accuracy and fairness of these technologies, because differences between racial groups are not biologically based and may instead reflect systemic biases.

Challenges in Local Deployment of AI

The local deployment of AI in health care illustrates the issues at hand. A 2021 study found that a CT scan-reading algorithm developed at one institution was less accurate for Black patients when applied to data from other medical centers. The result shows how limited dataset diversity can directly reduce the diagnostic accuracy of AI tools. Such disparities emphasize the need to scrutinize closely how these algorithms are developed and applied. Medical algorithms must be trained and calibrated on diverse datasets to avoid faulty analyses and to remain reliable across patient demographics.

Implications of Diverse Datasets

It’s crucial to consider how these AI systems are trained and the diverse datasets that influence their diagnostic capabilities. When an AI system is trained predominantly on data from a specific demographic, it may struggle to provide accurate predictions or diagnoses for individuals outside that group. This limitation can inadvertently lead to disparities in the quality of care received by different racial and ethnic groups. Therefore, the integration of diverse datasets during the development phase is vital to enhance the AI’s generalizability and effectiveness in real-world scenarios.

Ensuring representational diversity in datasets also helps in developing a robust AI model that can withstand variations in patient demographics and conditions. Nonetheless, the challenge lies in acquiring such diverse data and incorporating it seamlessly into the training processes. Additionally, this approach demands continual refinement and periodic assessment to adapt to new data trends and emerging health concerns. Understanding and mitigating these complexities can pave the way for more equitable health care solutions powered by AI.
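One way to make the idea of representational diversity concrete is to compare each group's share of the training data with its share of the population the tool is meant to serve. The following is a minimal sketch under stated assumptions: the group labels, counts, and reference shares are made up for illustration, and `representation_gaps` is a hypothetical helper, not part of any study or tool mentioned in this article.

```python
from collections import Counter


def representation_gaps(train_groups, reference_shares):
    """Return, per group, (share in training data) minus (reference share).

    A negative value means the group is under-represented in the training
    data relative to the reference population.
    """
    counts = Counter(train_groups)
    total = len(train_groups)
    gaps = {}
    for group, ref_share in reference_shares.items():
        train_share = counts.get(group, 0) / total if total else 0.0
        gaps[group] = train_share - ref_share
    return gaps


# Hypothetical data: 100 training records and an assumed target population.
train_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
reference_shares = {"A": 0.60, "B": 0.25, "C": 0.15}

gaps = representation_gaps(train_groups, reference_shares)
for group in sorted(gaps):
    print(f"group {group}: gap {gaps[group]:+.2f}")
```

A check like this only describes who is in the dataset. It does not show that a model performs equally well for each group, which requires evaluating predictions separately.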

Evaluating Algorithm Performance Across Institutions

Evaluating the performance of AI algorithms across different institutions is another critical aspect of addressing disparities. When algorithms, such as the CT scan-reading AI, are trained in a controlled institutional environment, their adaptability to external datasets becomes crucial for their broader application. Real-world deployment means these algorithms must work reliably across multiple settings, treating patients from various backgrounds accurately.

To achieve this, health care providers must rigorously test and validate AI tools on extensive multicenter data. Collaboration between institutions can yield a more comprehensive dataset, improving the algorithm’s ability to recognize and diagnose conditions accurately. This cooperative approach helps ensure that AI systems are effective not only in the setting where they were developed but also in diverse real-world scenarios. Moreover, these algorithms must be continuously monitored and updated to address biases that may arise after deployment.
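Multicenter validation of this kind usually means reporting performance separately for each site and patient group rather than as a single overall number. The sketch below assumes a simple record format of `(site, group, true_label, predicted_label)`. The site names, group labels, and values are hypothetical, and plain accuracy stands in for whatever metric a real evaluation would use.

```python
from collections import defaultdict


def accuracy_by_site_and_group(records):
    """Compute accuracy for every (site, group) pair.

    records: iterable of (site, group, y_true, y_pred) tuples.
    Returns a dict mapping (site, group) to the fraction of correct predictions.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for site, group, y_true, y_pred in records:
        key = (site, group)
        total[key] += 1
        correct[key] += int(y_true == y_pred)
    return {key: correct[key] / total[key] for key in total}


# Hypothetical predictions: site_1 is the development site, site_2 is external.
records = [
    ("site_1", "A", 1, 1), ("site_1", "A", 0, 0),
    ("site_1", "B", 1, 1), ("site_1", "B", 0, 0),
    ("site_2", "A", 1, 1), ("site_2", "A", 0, 0),
    ("site_2", "B", 1, 0), ("site_2", "B", 0, 0),
]

results = accuracy_by_site_and_group(records)
for (site, group), acc in sorted(results.items()):
    print(f"{site} / group {group}: accuracy {acc:.2f}")
```

In this made-up example the overall accuracy looks high, but the breakdown shows a drop for group B at the external site, which is the kind of pattern an aggregate figure would hide.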

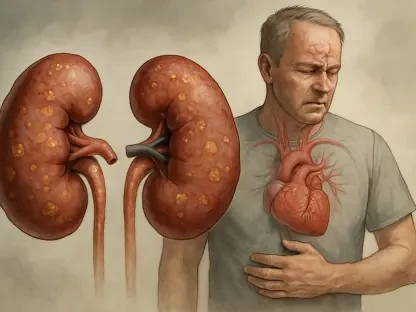

AI in Critical Care

In critical care settings, AI algorithms are increasingly used to predict disease severity and guide treatment decisions in intensive care units (ICUs). Established clinical scores such as MELD (Model for End-Stage Liver Disease) and SOFA (Sequential Organ Failure Assessment) serve as benchmarks for such predictions, highlighting the importance of high predictive accuracy in these environments. However, the potential for AI tools to perpetuate existing biases within the health care system remains a concern. The subtle patterns that AI systems identify can inadvertently reflect societal biases, so thorough evaluation is needed to prevent disparate outcomes from becoming further entrenched.

Accuracy and Bias in High-Stakes Environments

The high-stakes environment of critical care demands that AI algorithms be both precise and unbiased. Where timely and accurate decision-making is vital, any bias in predictive algorithms can significantly affect patient outcomes. AI tools used in critical care derive much of their decision-making from historical data. If those data contain biases, whether racial, socioeconomic, or otherwise, the AI can reinforce and perpetuate the resulting disparities. Transparent and unbiased data collection and processing methods are therefore paramount.

Ongoing scrutiny and regular updates to these AI systems are necessary to align with the latest clinical guidelines and standards. Moreover, incorporating a diverse range of medical expertise during the algorithm design phase can aid in identifying and mitigating inherent biases. The task involves consistent validation of the AI’s predictive accuracy for various patient demographics to guarantee it serves all equally, without favoring one group over another. Only through such careful oversight can critical care AI tools become reliable allies in medical decision-making.

Evaluating Outcomes and Mitigating Risks

When evaluating AI outcomes, one must also consider the subtle yet profound systemic biases within health care structures that AI might reinforce. Because AI systems learn from historical data, any disparities entrenched in those data will be reflected in their decisions. As a result, certain patient groups risk being steered toward inferior care pathways by biased AI diagnoses. A meticulous evaluation of outcomes is therefore critical to ensure equity in health care provision.

Mitigation strategies such as algorithmic audits and bias detection tools are essential to maintaining the integrity of AI operations. Feedback loops in which health care professionals review and cross-check AI suggestions are vital for maintaining high standards of care. Collaborations with ethicists and sociologists can provide broader insight into societal patterns of bias, helping refine the AI’s decision-making process. By prioritizing these review systems, health care professionals can guard against the inadvertent perpetuation of bias and support fair and equitable treatment for all patients.
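As a rough illustration of what an algorithmic audit can check, the sketch below compares true positive rates (the share of actual cases the model catches) across patient groups and flags the model when the gap exceeds a tolerance. The function names, the 0.10 tolerance, and the data are assumptions chosen for illustration. This is one common fairness check among many, not the method used by any tool discussed here.

```python
def true_positive_rates(records):
    """Return {group: TPR} for binary labels.

    records: iterable of (group, y_true, y_pred) tuples with labels 0 or 1.
    Groups with no positive cases are omitted because TPR is undefined for them.
    """
    positives = {}
    hits = {}
    for group, y_true, y_pred in records:
        if y_true == 1:
            positives[group] = positives.get(group, 0) + 1
            hits[group] = hits.get(group, 0) + int(y_pred == 1)
    return {group: hits[group] / positives[group] for group in positives}


def audit(records, max_gap=0.10):
    """Flag the model if the largest TPR difference between groups exceeds max_gap.

    max_gap is an assumed tolerance, not a clinical or regulatory standard.
    """
    rates = true_positive_rates(records)
    if not rates:
        return {"rates": {}, "gap": 0.0, "flagged": False}
    gap = max(rates.values()) - min(rates.values())
    return {"rates": rates, "gap": gap, "flagged": gap > max_gap}


# Hypothetical audit sample: every patient here truly has the condition.
sample = (
    [("A", 1, 1)] * 4                       # group A: 4 of 4 cases detected
    + [("B", 1, 1)] * 2 + [("B", 1, 0)] * 2  # group B: 2 of 4 cases detected
)

report = audit(sample)
print(report)
```

A flagged result is a prompt for the human review described above, not a verdict: clinicians and auditors would still need to examine why the gap exists before changing the model or how it is used.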

AI’s Role in Medical Training

AI’s influence is extending into the realm of medical education, where large language models (LLMs) like ChatGPT are being increasingly integrated. These tools are being utilized to create comprehensive case studies and interactive simulations for diagnostic practice, providing a dynamic learning environment for medical students. However, studies by Gichoya and her team indicate that AI models like GPT-4 potentially reinforce common biases. This is particularly concerning in contexts where certain diseases are more prevalent among specific racial or gender groups.

Enhancing Learning Environments

The integration of AI into medical training provides students with innovative tools that enhance learning through interactive and practical scenarios. Students can benefit from AI-driven case studies, which simulate realistic clinical situations, aiding in the development of diagnostic acumen. By exposing students to a wide range of scenarios, AI tools like ChatGPT can assist in broadening their clinical knowledge base. However, the presence of inherent biases within these models can lead to disproportionate representations, influencing diagnostic accuracy and decision-making.

Mitigating these biases requires a persistent effort to train AI systems on balanced datasets that accurately reflect the diverse patient population they will ultimately serve. Developing robust feedback mechanisms wherein students can evaluate and challenge AI-driven diagnoses can aid in critical thinking and discernment. Continual updating and refinement of these AI tools based on real-world data and user feedback are crucial to maintaining the educational value while minimizing systemic biases.

Balancing Benefits and Risks

While AI’s application in medical training brings significant educational benefits, it is equally vital to address the associated risks of bias propagation proactively. The reinforcement of biases, especially when related to diseases prevalent in specific racial or gender groups, could lead to over-diagnosing or underestimating risks in certain populations. This creates a scenario where some individuals might receive disproportionate clinical attention, whereas others are neglected, affecting overall health care quality and equity.

To balance these benefits and risks, educators and developers must engage in continuous dialogue and collaboration, assessing AI model effectiveness and potential biases. Awareness and recognition of these biases among medical students are equally important, encouraging a critical evaluation of AI recommendations. Promoting a culture of transparency and continuous learning will help future medical professionals better understand and navigate the complex interplay of AI tools in health care. Ultimately, the goal is to harness AI’s educational capabilities while safeguarding against its pitfalls, fostering a generation of clinicians equipped to deliver unbiased, quality care.

Ensuring Fair and Effective AI Deployment

The broader consensus among experts is that while AI holds significant promise for enhancing many facets of medical care, its deployment must be meticulously managed to avoid compounding existing health disparities. Outlets such as Nature, Hopkins Bloomberg Public Health magazine, STAT, and NPR all emphasize the need for rigorous oversight and continuous refinement of AI tools. Without that oversight, these sophisticated models could further entrench biases, allowing systemic inequities in patient care to persist and potentially worsen.

Journalistic Oversight and Public Awareness

A key aspect of ensuring fair and effective AI deployment involves vigilant journalistic oversight and raising public awareness about these emerging issues. Journalists play a crucial role in investigating new AI medical tools within local contexts, examining aspects of their development, deployment, and their capacity to introduce or mitigate disparities. Detailed journalistic inquiries can shed light on the intricacies of AI application within health care, bringing transparency to areas often veiled by technical complexities.

Public awareness campaigns can further this cause by educating communities on the implications of AI in health care, empowering them with knowledge to make informed choices about their care options. Greater transparency from health care providers concerning the use of AI tools also fosters trust and confidence among patients. By creating a well-informed public, we set the groundwork for constructive dialogues between medical professionals, AI developers, and the communities they serve.

Future Considerations and Ethical Oversight

As AI continues to advance in the health care sector, addressing bias and disparities is crucial to ensuring equitable outcomes. The finding by Gichoya and her team that AI could predict a person’s race from radiological images remains a reminder of how complex these tools are and of how readily they may reflect systemic biases rather than biology. Thorough reviews and continuous monitoring of these technologies are necessary to mitigate unintended consequences that could exacerbate existing disparities. In this way, the immense potential of AI in health care can be harnessed responsibly, improving medical outcomes for all demographics without perpetuating inequality or bias.