The sudden loss of speech following a stroke can be a profoundly isolating experience, trapping clear thoughts and urgent needs behind a physical inability to articulate them. For many survivors, the mind remains sharp, yet the connection to the muscles of the throat and mouth is severed, leading to immense frustration. This silent struggle has long been a challenge for both patients and clinicians, with existing assistive technologies often proving slow, cumbersome, or highly invasive. Now, a groundbreaking development from the University of Cambridge offers a new form of hope. Researchers have created a non-invasive wearable device called Revoice, which uses a sophisticated blend of artificial intelligence and sensitive biosensors to translate silently mouthed words into fluent, emotionally aware speech. This soft, flexible, and practical device represents a significant leap forward, aiming not just to give patients a voice but to restore the nuance and spontaneity of natural human conversation.

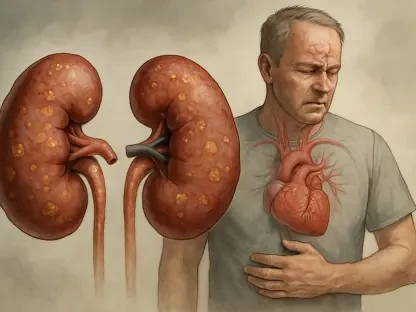

The Silent Struggle of Dysarthria

Dysarthria, a neuromuscular condition affecting approximately half of all stroke survivors, is the central challenge that Revoice seeks to overcome. Unlike aphasia, which can impair language comprehension and formation, dysarthria is a physical impairment that weakens the muscles of the face, mouth, and vocal cords, making clear articulation difficult. Patients often have slurred or slowed speech, or can manage only short, disjointed phrases. As highlighted by the research lead, Professor Luigi Occhipinti, the core issue is not cognitive but a breakdown in the neural pathways that transmit signals from the brain to the throat. As a result, individuals know precisely what they wish to communicate but are physically prevented from saying it. The impact on quality of life is significant, creating barriers for patients and serious challenges for their caregivers and families as they try to manage daily life without clear verbal communication.

While conventional rehabilitation offers some recourse, it has its limitations. Traditional speech therapy often involves repetitive word drills and structured exercises designed to strengthen weakened muscles. However, patients frequently find it difficult to apply the skills learned in a clinical setting to the dynamic and unpredictable nature of everyday conversation. The Revoice device was specifically conceived to bridge this critical gap, providing an intuitive and portable solution for those who may eventually recover their speech but require substantial support during their recovery journey. It stands in stark contrast to existing assistive technologies. Many current solutions, such as letter-by-letter input via eye-tracking systems, are slow and laborious, feeling unnatural and cumbersome in practice. Other advanced options require invasive brain implants, a major surgical step that is neither suitable nor necessary for many stroke patients on the path to recovery. Revoice offers a non-invasive, seamless, and real-time communication pathway, turning a few mouthed words into fluent sentences.

Harnessing AI and Biosignals for Speech

The technological foundation of the Revoice device is a sophisticated integration of ultra-sensitive sensors and advanced artificial intelligence, all housed within a comfortable choker-style wearable. When worn, the device is positioned to capture critical biological signals directly from the user’s neck. A primary set of sensors detects the minute vibrations generated by the throat muscles as the wearer silently mouths words, capturing the physical intent of speech without any sound being produced. Simultaneously, the device monitors the user’s heart rate, using pulse signals as a simplified yet effective proxy to infer their underlying emotional state, such as frustration, calmness, or agitation. These raw data streams—the physical intent from vibrations and the emotional context from heart rate—are then processed in real time by the device’s intelligent core. This dual-input system is designed to create a holistic picture of the user’s communication needs, going far beyond simple speech-to-text conversion to capture the unspoken elements of expression.

Powering this innovative system is a dual-agent AI. The first agent is tasked with the complex job of speech reconstruction. It meticulously decodes the subtle throat vibrations, analyzing the patterns to identify the intended words from the silently mouthed speech fragments with a high degree of accuracy. The second AI agent focuses on adding context and emotional nuance to the communication. It analyzes the user’s emotional state, derived from their heart rate, and combines it with contextual information, such as the time of day or ambient conditions. This rich, multi-layered data is then fed into an embedded lightweight large language model (LLM). This LLM, which has been specifically optimized for minimal power consumption to make the device practical for extended daily use, expands the user’s short, mouthed phrases into complete, contextually appropriate, and emotionally expressive sentences. This intelligent synthesis allows the device to generate output that feels natural and truly reflective of the user’s intent.
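The flow described above (decoded word fragments, an emotion estimate from heart rate, and contextual cues, all handed to a language model) can be sketched roughly as follows. This is an illustrative outline only, not the Cambridge team's implementation: the `Context` class, the 1.2 heart-rate ratio, the emotion labels, the time-of-day buckets, and the prompt wording are all assumptions made for the example, and the language model is represented by any function that takes a prompt string and returns text.

```python
from dataclasses import dataclass
from datetime import time
from typing import Callable, List


@dataclass
class Context:
    """Hypothetical container for the non-speech signals the article mentions."""
    heart_rate_bpm: float
    resting_rate_bpm: float
    clock: time


def infer_emotion(heart_rate_bpm: float, resting_rate_bpm: float,
                  ratio: float = 1.2) -> str:
    # Assumed rule of thumb: a heart rate well above resting suggests distress.
    # The real device's emotion model is not described in this article.
    if heart_rate_bpm >= resting_rate_bpm * ratio:
        return "distressed"
    return "calm"


def describe_time(clock: time) -> str:
    # Coarse, assumed time-of-day buckets used as contextual information.
    if 5 <= clock.hour < 12:
        return "in the morning"
    if 12 <= clock.hour < 17:
        return "in the afternoon"
    if 17 <= clock.hour < 21:
        return "in the evening"
    if clock.hour >= 21:
        return "late in the evening"
    return "during the night"


def build_prompt(words: List[str], ctx: Context) -> str:
    # Combine the decoded fragment with emotional and contextual cues.
    emotion = infer_emotion(ctx.heart_rate_bpm, ctx.resting_rate_bpm)
    return (
        f"Mouthed words: {' '.join(words)}\n"
        f"Speaker seems: {emotion}\n"
        f"Time of day: {describe_time(ctx.clock)}\n"
        "Expand into one natural, polite sentence."
    )


def expand(words: List[str], ctx: Context, llm: Callable[[str], str]) -> str:
    # `llm` stands in for the embedded language model (any prompt -> text function).
    return llm(build_prompt(words, ctx))


if __name__ == "__main__":
    ctx = Context(heart_rate_bpm=105, resting_rate_bpm=70, clock=time(22, 30))
    print(build_prompt(["we", "go", "hospital"], ctx))
```

Keeping the language model behind a plain function makes the sketch easy to try with a stub, and it mirrors the separation the article describes between decoding the words and enriching them with context.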

Validating the Concept and Future Potential

To validate the system, the researchers conducted a small-scale trial involving five patients diagnosed with dysarthria alongside a control group of ten healthy individuals. During the study, participants wore the device and were instructed to silently mouth short phrases. A simple trigger, a deliberate double nod, allowed them to signal the LLM to expand their mouthed input into a full sentence. In this initial trial, the device achieved a word error rate of only 4.2% and an even lower sentence error rate of just 2.9%. This precision is critical for a communication tool intended to be a reliable voice for its user.

One example from the study illustrated the device’s ability to blend linguistic, emotional, and contextual data. A participant mouthed the three-word phrase, “We go hospital.” The Revoice system detected an elevated heart rate, which it interpreted as a sign of frustration or discomfort. It also registered that it was late in the evening. The embedded LLM synthesized these inputs to generate a far more nuanced and complete sentence: “Even though it’s getting a bit late, I’m still feeling uncomfortable. Can we go to the hospital now?”
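For readers unfamiliar with the metric, word error rate is conventionally computed as the number of word substitutions, deletions, and insertions needed to turn the system's output into the intended text, divided by the number of intended words. The sketch below implements that conventional definition; the article does not say exactly how the study computed its figures, and the function name and the sample phrases are made up for illustration.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from output
            insertion = curr[j - 1] + 1  # extra word in output
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One wrong word out of three intended words.
    print(word_error_rate("we go hospital", "we go hostel"))
```

Under this definition, a 4.2% word error rate corresponds to roughly one wrong word in every 24 intended words.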

Feedback from the trial was also positive: participants reported a 55% increase in satisfaction with their communication abilities while using the device. This suggests the device does more than convert mouthed words into text and can support genuinely expressive communication. Looking ahead, the researchers are planning a larger clinical study in Cambridge for native English-speaking dysarthria patients to further assess the system’s viability. While extensive clinical trials are required before Revoice can become widely available, the team has ambitious goals for future iterations. These include incorporating multilingual capabilities, expanding the range of emotional states the device can recognize, and developing a fully self-contained version for practical, independent daily use. The potential applications of this technology also extend beyond stroke rehabilitation to support individuals with other conditions affecting speech, such as Parkinson’s disease and motor neuron disease. The project’s ultimate goal was succinctly captured by Professor Occhipinti, who stated that this is about giving people their independence back, as communication is fundamental to dignity and recovery.