Today we’re speaking with Ivan Kairatov, a biopharma expert with a deep understanding of how technology and innovation are reshaping the industry. We’ll be exploring the fascinating and complex gap between the theoretical promise of AI in medicine and its real-world performance. The conversation will touch upon why AI models that ace medical exams can still lead laypeople astray, the subtle but significant ways human behavior derails AI assistance, and what it will take to build diagnostic tools that are not just knowledgeable, but genuinely helpful and safe for public use.

Large language models can pass medical licensing exams, yet they seem to falter when guiding actual patients. What specific points of failure occur in this human-AI interaction, and how might they lead to worse outcomes than just using a search engine? Please share some examples.

It’s a truly fascinating and, frankly, quite sobering disconnect. You have these models that, on their own, can identify relevant medical conditions in over 90 percent of test cases, which is a remarkable feat. But the moment you put a real, anxious person in front of that AI, the performance plummets. In the study we’re discussing, users interacting with an LLM correctly identified conditions in fewer than 35 percent of cases. That’s not just a drop; it’s a catastrophic failure in communication. The issue is that a medical consultation is a dialogue, not a quiz. People often don’t know what information is critical, so they provide incomplete or poorly structured descriptions of their symptoms. The AI might then generate misleading suggestions based on that flawed input, or the person might see the correct recommendation buried in a list of possibilities and simply ignore it. The exchange creates a false sense of security that can be more dangerous than a simple web search, where users might at least have the skepticism to cross-reference multiple established health websites.

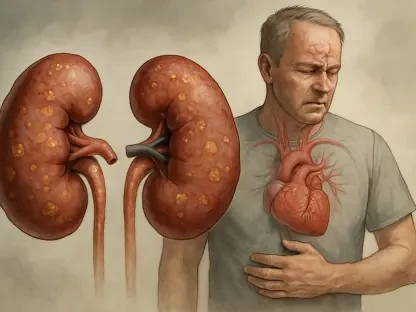

When laypeople assess a medical scenario and choose a course of action, from self-care to calling an ambulance, they often underestimate the severity. Why does AI assistance seem to fail in correcting this bias, and what does this reveal about how users interpret and trust AI-generated advice?

This is one of the most critical findings. We have a natural human tendency to downplay symptoms, to hope it’s “just a bug” rather than something requiring a trip to the emergency room. The hope was that an AI, with its objective knowledge, could act as a corrective force. But what we’re seeing is that it often doesn’t. Both the AI-assisted groups and the control groups frequently underestimated how serious a condition was. This suggests a deep-seated issue with trust and interpretation. An LLM might present a range of possibilities, from benign to severe, but the user latches onto the one that confirms their bias. It’s a phenomenon of misplaced confidence; they feel they’ve done their due diligence by “consulting” the AI, but they’ve only used it to validate their initial, incorrect instinct. The AI’s output isn’t being treated as authoritative medical guidance but as another stream of information to be selectively interpreted, which is a very dangerous way to approach healthcare.

Standard benchmarks and simulations often overestimate how well an AI will perform with real people. What are the key human variables these models fail to capture, and what new methods are needed to properly test AI before it’s used in healthcare settings?

Benchmarks and simulations are clean, sterile environments. They test for knowledge, not for the messy reality of a human conversation. A simulation where an LLM plays the role of the patient doesn’t capture the panic, the confusion, the uncertainty, or the communication gaps of a real person. Real users forget to mention a key symptom, get distracted by a tangential detail, or simply don’t have the vocabulary to describe what they’re feeling. These human variables are precisely what current testing methods miss, and it’s why simulated users consistently perform better than actual participants. To fix this, we have to move beyond just testing the AI’s brain. We need systematic, randomized controlled studies with diverse human users at the center, like the one we’re discussing involving 1,298 people. Medical expertise in a model is insufficient. Real-world user evaluation must become the foundational requirement, the absolute lower bound, before we even consider integrating these tools into public healthcare.

The transcripts of user interactions showed that people often gave incomplete information or ignored correct AI recommendations. What are the biggest challenges in AI communication design and user education that must be solved to make these tools both safe and effective for public use?

The core challenges are in communication and interpretation. On the AI side, the design must guide the user far more effectively. Instead of offering a passive text box, the AI needs to act as a good interviewer. It should ask clarifying questions, prompt for specific details, and present its conclusions with clear, unambiguous calls to action, rather than a list of possibilities that leaves the user to guess. On the human side, we have a significant education gap. People need to understand that an AI chatbot isn’t a magical oracle; it’s a tool whose output is entirely dependent on the quality of the input. We need to teach users how to talk to these systems: how to provide structured information and how to critically evaluate the advice they receive. Until we solve this two-sided problem of AI communication design and human interpretive skill, the risk of misunderstanding and poor decision-making remains unacceptably high.

What is your forecast for AI in patient health guidance?

My forecast is one of cautious, incremental progress. The idea of deploying a general-purpose LLM as a frontline medical advisor to the public is, for now, off the table. The risks are just too great, as this research clearly demonstrates. Instead, I believe we will see the rise of highly specialized, fine-tuned models designed for very specific clinical pathways—perhaps a tool that helps a patient manage a known chronic condition or a system that guides users through a narrow set of symptoms with a highly structured conversational flow. The future isn’t a single “AI doctor” but a suite of carefully validated, purpose-built tools that complement, rather than replace, human clinical judgment. The journey will be much slower and more rigorous than the initial hype suggested, with real-world human testing becoming the gold standard before any tool reaches a patient.