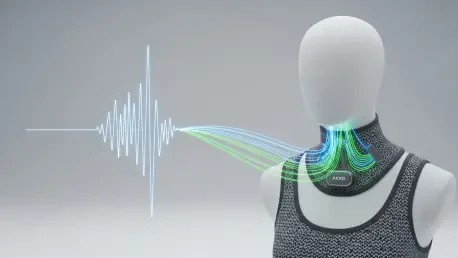

We’re joined today by Ivan Kairatov, a biopharma expert whose work at the intersection of technology and research is pushing the boundaries of what’s possible in patient care. We’ll be exploring a groundbreaking AI-powered wearable that translates silent throat movements into fluent speech for stroke survivors. Our discussion will delve into how this device uniquely captures both speech and emotion, its innovative near-real-time processing that avoids the stilted pace of older technologies, and the remarkable precision it achieves. We’ll also touch on the sophisticated AI that not only deciphers a user’s intent but can also enrich it, transforming fragmented inputs into socially nuanced communication.

This intelligent throat system uniquely combines laryngeal muscle vibrations with carotid pulse signals. What specific communication challenges does this dual-sensor approach solve compared to systems that only track muscle movement, and how does it help generate more emotionally tuned speech for patients? Please detail this process.

It’s a fantastic question because it gets to the very heart of what makes this technology feel more human. Systems that only track muscle movement are essentially just transcribers; they capture the “what” of speech but completely miss the “how.” Communication is so much more than words—it’s tone, it’s feeling. By integrating the carotid pulse signal, we’re tapping into the body’s physiological response to emotion. The device simultaneously decodes the laryngeal vibrations for the content of the speech and the pulse signals for the emotional context. This allows the AI to synthesize speech that isn’t just a flat, robotic recitation but one that carries the intended emotional weight, whether it’s frustration, relief, or a neutral state. It bridges that emotional gap that leaves many patients feeling disconnected, even when they can technically form words with other devices.

Many alternative communication devices decode speech word-by-word, which can feel slow and unnatural. Your system uses token-based decoding, analyzing signals in roughly 100-millisecond chunks. How does this method create a more continuous and fluid communication experience, and what technical hurdles did you overcome to achieve this near-real-time processing?

The shift from word-level to token-level decoding is a game-changer for conversational flow. Imagine trying to have a conversation where you have to wait for each word to be fully processed before the next one can begin—it’s incredibly disjointed. By breaking the signal down into tiny, 100-millisecond tokens, we’re essentially mirroring the continuous nature of natural speech. The system processes these chunks in a stream, allowing it to assemble sentences fluidly. The main technical hurdle was latency. Processing this much data in near real-time is computationally expensive. We implemented a technique called knowledge distillation, which essentially trains a smaller, more efficient model to mimic a larger, more complex one. This single step reduced the computational load and latency by about 76%, all while keeping the accuracy at an impressive 91.3%. It’s this efficiency that makes a smooth, continuous output possible, with a total response time, including speech synthesis, in the order of seconds.

The system can differentiate between three emotional states: neutral, relieved, and frustrated. What makes the carotid pulse a reliable indicator for these specific emotions, and how does the device’s design isolate this signal from the “crosstalk” of nearby throat muscle vibrations?

The carotid pulse is a wonderful physiological indicator because it’s directly influenced by the autonomic nervous system, which governs our fight-or-flight responses and emotional states. When we feel frustrated or relieved, our heart rate and blood pressure change in subtle but measurable ways, and those changes are reflected in the carotid pulse. The real engineering challenge was preventing the powerful vibrations from the laryngeal muscles from drowning out these faint pulse signals. It’s like trying to hear a whisper in a loud room. To solve this, we developed a stress-isolation treatment using a specialized polyurethane acrylate layer. This layer is integrated into the choker and acts as a dampener, effectively filtering out the muscular “noise.” This seemingly small addition was crucial, improving the signal-to-interference ratio by more than 20 dB and allowing our emotion-decoding network to achieve an 83.2% accuracy.

Beyond direct translation, the system features an LLM for error correction and optional sentence expansion, which reportedly boosted patient satisfaction by 55%. Could you describe how this expansion mode works in practice and provide an example of how it transforms a patient’s brief input into a fuller, more socially appropriate expression?

This feature is about reducing the patient’s cognitive and physical load. A patient who is fatigued might only have the energy to mouth a couple of key words, like “water, please.” In direct translation mode, that’s exactly what you’d get. But with the sentence expansion mode activated, the LLM takes that core input and enriches it. It pulls in contextual data—like the time of day or the weather, retrieved from a local interface—and the detected emotional state. So, “water, please,” mouthed with a neutral emotional state in the morning, might be expanded to, “Good morning. Could I please have a glass of water?” This not only sounds more natural and polite but also saves the patient from having to painstakingly form every single word. That 55% jump in patient satisfaction really underscores how much this feature helps restore a sense of normalcy and ease to their interactions.

Training a model on a small group of five stroke patients presents unique challenges. What were the key steps in adapting the model from healthy subjects to patients with dysarthria, and what measures ensure the system remains accurate despite issues like motion artifacts or background noise?

Working with a small patient cohort requires a very deliberate and strategic approach. You can’t just throw raw data at the model and hope for the best. Our first step was to build a strong foundation by training the initial model on a larger dataset from 10 healthy subjects. This taught the model the fundamental patterns of silent speech. Then, we moved to the fine-tuning stage with the five patients. The key here was adapting the model to the specific, often less distinct, neuromuscular signals of individuals with dysarthria. Critically, we made the decision not to clean up the data too much. We intentionally kept signals that included motion artifacts or background noise. It seems counterintuitive, but this forces the model to become more robust and generalize better to real-world conditions, where perfect, clean signals are a rarity. This approach is a major reason the system can function so reliably outside of a controlled lab environment.

Given the system’s low word error rate of 4.2% and its ability to distinguish between visually similar mouth shapes, what were the most significant breakthroughs in signal processing or machine learning that made this level of precision possible for a non-invasive wearable device?

Achieving that 4.2% word error rate in a non-invasive wearable comes down to a synergy of several breakthroughs. On the hardware side, the development of ultrasensitive textile strain sensors was paramount; without a high-quality initial signal, the best software in the world can’t work miracles. On the machine learning front, the token-based decoding network was a huge leap forward, as it allows the model to learn the nuances of speech flow rather than just isolated words. But I think the most significant breakthrough was our ability to reliably differentiate between articulatorily similar word pairs. We achieved 96.3% accuracy here, which is remarkable. This precision comes from a deep learning model that doesn’t just look at a signal at one moment but considers the context of the preceding 14 tokens. This contextual awareness allows it to discern the subtle differences in muscle activation that distinguish, for example, two words that look almost identical when mouthed.

What is your forecast for wearable silent-speech technology?

I believe we’re on the cusp of this technology becoming a seamless, integrated part of life for those with speech motor disorders. The forecast isn’t just about incremental improvements in accuracy; it’s about personalization and integration. In the next five to ten years, I expect these devices to move beyond a predefined vocabulary and learn a user’s unique speech patterns and personal lexicon in real time. We’ll see them integrate with smart home devices, personal assistants, and communication apps, allowing a user to not just talk to a person in the room but to control their environment and connect with the world, all through silent speech. The ultimate goal is to make the technology so intuitive and responsive that it becomes an invisible extension of the user’s own will to communicate.