The pharmaceutical industry is in the midst of a monumental technological pivot, committing billions of dollars to the promise of artificial intelligence agent platforms in a bid to revolutionize its long-standing operational models. Spearheaded by landmark collaborations like the global partnership between Novartis and Salesforce, this trend represents a strategic gamble by “Big Pharma” to conquer decades of inefficiency. The core ambition is to deploy sophisticated AI agents that can finally unify vast, fragmented data streams and streamline every process from the research lab to the sales field. However, this high-stakes endeavor places the immense potential of AI in direct conflict with the unforgiving, highly regulated landscape of the life sciences, revealing a considerable gap between the industry’s ambitious vision and the current, real-world capabilities of the technology.

The Foundational Challenge: Reconciling AI’s Promise with Pharma’s Reality

The Drive for Data Unification

At the very heart of the pharmaceutical industry’s aggressive push into artificial intelligence lies the persistent and costly problem of data silos. For decades, major pharmaceutical companies have operated as a collection of disconnected informational islands, with vast and valuable data pools accumulating separately across clinical operations, medical affairs, commercial sales, and patient support services. This deep-seated fragmentation has long been a major impediment, crippling operational efficiency, hindering collaboration, and preventing the emergence of a holistic, 360-degree view of the business. The primary promise of advanced platforms like Salesforce’s Agentforce is to finally bridge these divides. The goal is to architect a unified data model that enables a seamless orchestration of information across the entire drug lifecycle. This would transform the traditional Customer Relationship Management (CRM) system from a passive “system of record,” which relies heavily on manual data entry, into a dynamic and proactive “system of insight” capable of generating intelligent, data-driven recommendations that could accelerate decision-making and unlock new efficiencies from clinical trial recruitment to post-market surveillance.

The Probabilistic vs. Deterministic Dilemma

A fundamental tension, however, threatens to undermine this transformative vision: the conflict between the nature of generative AI and the stringent requirements of the pharmaceutical industry. Modern AI models are fundamentally “probabilistic,” meaning they are designed to generate outputs that are statistically likely or plausible-sounding given their training data, not outputs that are guaranteed to be factually correct. This characteristic becomes a critical liability in a field where “deterministic” outcomes (absolute accuracy, unwavering consistency, and strict adherence to complex legal and safety protocols) are not merely preferred but mandatory. The demand for certainty in areas like drug safety reporting and regulatory compliance makes the unreliability of probabilistic AI the single greatest obstacle to its deployment in the industry’s most high-stakes functions. A single error, misinterpretation, or “hallucination” by an AI agent in these contexts could lead to severe patient harm, significant regulatory penalties, and serious legal consequences, which leaves essentially no margin for error.

From Hype to Cautious Optimism

Reflecting the immense challenges of implementation, the narrative surrounding AI agents within the life sciences sector is undergoing a significant maturation, shifting from a period of unbridled hype to one of sober realism. The initial rhetoric, often championed by tech executives, painted a picture of easily built, fully autonomous agents capable of independent decision-making and complex task execution. However, as the industry moves from theory to practice, this optimistic narrative has been tempered by a more nuanced and cautious perspective. Today, industry leaders and tech providers alike more commonly describe these sophisticated tools as being “interns at best,” a clear acknowledgment that while powerful, they require constant supervision and guidance. The emerging consensus is that the large language model (LLM) alone is profoundly insufficient for reliable enterprise use. A successful deployment hinges on the construction of a robust and resilient infrastructure built on pillars of trusted data, rigorous governance frameworks, and unwavering human oversight to ensure that the technology operates safely and effectively within the rigid confines of the pharmaceutical world.

AI in Action: Current Applications and Future Aspirations

Practical Gains in Low-Risk Areas

In the immediate term, the most practical and widespread applications of AI agents are focused squarely on boosting efficiency in lower-risk, administrative-heavy domains where the cost of an error is minimal. These early use cases serve as a crucial proving ground for the technology while delivering tangible productivity gains. For instance, a sales representative can now verbally dictate detailed notes following a meeting with a healthcare provider, and an AI agent can instantly process the audio, draft a structured summary, and log it correctly within the CRM system, saving hours of tedious manual data entry each week. Similarly, these intelligent systems can be programmed to analyze communications and flag potential medical inquiries mentioned during interactions, prompting the representative to follow the proper logging and escalation procedures. In the realm of clinical research, AI agents are also beginning to automate monotonous but critical tasks in study management, such as identifying suitable trial sites based on complex criteria and scanning patient records to find eligible candidates, thereby accelerating the slow and costly process of clinical trial recruitment.
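To make the flagging use case concrete, here is a minimal sketch of how a note could be scanned for possible medical inquiries. It is an illustration only, not how Agentforce or any vendor product works: the phrase list, the `Flag` type, and the `flag_medical_inquiries` function are all hypothetical, and a real system would draw its triggers from medical affairs rather than a hard-coded list.

```python
import re
from dataclasses import dataclass

# Hypothetical trigger phrases, assumed for illustration only.
INQUIRY_PATTERNS = [
    r"\boff[- ]label\b",
    r"\bdos(?:e|age|ing)\b",
    r"\bside effects?\b",
    r"\bdrug interactions?\b",
]


@dataclass
class Flag:
    phrase: str    # the text that matched a trigger pattern
    sentence: str  # the sentence it appeared in, for the rep to review


def flag_medical_inquiries(note: str) -> list[Flag]:
    """Return one Flag per (sentence, pattern) match. Nothing is filed automatically."""
    flags = []
    # Naive sentence split on ., ? or ! followed by whitespace.
    for sentence in re.split(r"(?<=[.?!])\s+", note.strip()):
        for pattern in INQUIRY_PATTERNS:
            match = re.search(pattern, sentence, flags=re.IGNORECASE)
            if match:
                flags.append(Flag(match.group(0), sentence))
    return flags


note = (
    "The physician liked the new packaging. "
    "She asked whether the dosage changes for elderly patients. "
    "Follow up next month."
)
for flag in flag_medical_inquiries(note):
    print(f"Review needed ({flag.phrase!r}): {flag.sentence}")
```

The sketch only surfaces candidate sentences. The decision to log or escalate stays with the representative, which matches the supervised, low-risk role described above.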

The High-Stakes Frontier: Compliance and Safety

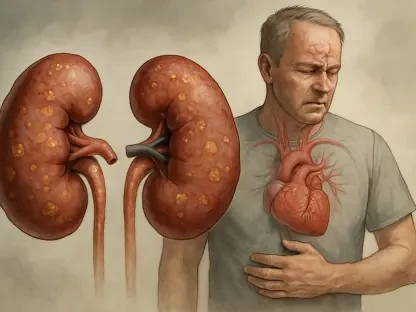

The most transformative and highly anticipated application for AI agents in pharmaceuticals remains tantalizingly out of reach: real-time compliance monitoring, particularly in the critical area of adverse event detection. The ultimate vision is for an AI to function as a “compliance officer in the background,” capable of listening to and analyzing conversations between sales representatives and physicians in real time. This AI would be trained to identify specific language or phrases that suggest a potential patient safety issue, immediately prompting the representative to report the incident through official, regulated channels. However, this critical functionality remains aspirational, held back by the same probabilistic nature of AI that makes it unreliable for deterministic tasks. The risk of an AI agent failing to detect even a single adverse event is simply too high, given the severe legal, financial, and regulatory fallout that would inevitably follow. Until the technology can guarantee near-perfect accuracy and reliability, this high-stakes frontier will remain a long-term goal rather than a present-day reality for the industry.
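A short calculation shows why even a small miss rate is hard to accept here. The sketch below assumes each adverse event is detected independently with the same probability (the detector's recall). The recall values and the figure of 500 events are hypothetical and do not come from any real system.

```python
def prob_at_least_one_miss(recall: float, n_events: int) -> float:
    """Chance that at least one of n independent true events goes undetected."""
    if not 0.0 <= recall <= 1.0:
        raise ValueError("recall must be between 0 and 1")
    if n_events < 0:
        raise ValueError("n_events must be non-negative")
    # P(all n detected) = recall ** n, so P(at least one miss) is the complement.
    return 1.0 - recall ** n_events


# Hypothetical recall levels, each evaluated over 500 true adverse events.
for recall in (0.99, 0.999, 0.9999):
    p = prob_at_least_one_miss(recall, n_events=500)
    print(f"recall={recall}: P(at least one miss in 500 events) = {p:.3f}")
```

Under these assumptions, a detector that catches 99 percent of events is almost certain to miss at least one across a few hundred of them, which is why the bar for this use case sits so far above what is acceptable for note-taking or scheduling.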

The Hidden Costs: Customization and Governance

Deploying enterprise-grade AI agents is a far more complex and resource-intensive endeavor than a simple “plug-and-play” software installation. While platform providers like Salesforce offer foundational capabilities and pre-built modules tailored for the life sciences, companies such as Novartis must still invest heavily in extensive customization to adapt these agents to their unique operational workflows, internal data structures, and distinct corporate culture. This process of tailoring the AI’s “flavor” is only the beginning. More importantly, large enterprises are required to build their own comprehensive governance and compliance frameworks on top of the platform’s existing guardrails, such as role-based data access and data masking layers. This involves developing intricate systems for monitoring AI behavior, validating its outputs, and creating audit trails to ensure every action the AI takes is traceable and defensible. This complex, costly, and continuous process underscores the reality that a successful AI strategy is built not just on a powerful algorithm but on a deep and unwavering commitment to meticulous oversight and control.
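As a rough picture of what such a governance layer involves, the sketch below combines role-based field access, data masking, and an audit trail in a few lines. Everything in it is assumed for illustration: the role names, the field names, the in-memory `AUDIT_LOG`, and the `agent_read` function are hypothetical and do not reflect Salesforce's or Novartis's actual implementation.

```python
import json
from datetime import datetime, timezone

# Hypothetical roles and the fields each role may see unmasked.
ROLE_VISIBLE_FIELDS = {
    "sales_rep": {"hcp_name", "visit_summary"},
    "safety_officer": {"hcp_name", "visit_summary", "patient_id"},
}

MASK = "***"
AUDIT_LOG: list[str] = []  # stand-in for an append-only audit store


def mask_record(record: dict, role: str) -> dict:
    """Replace every field the role is not cleared for with a mask."""
    visible = ROLE_VISIBLE_FIELDS.get(role, set())  # unknown roles see nothing
    return {key: (value if key in visible else MASK) for key, value in record.items()}


def agent_read(record: dict, role: str, agent_id: str) -> dict:
    """Return the masked view and record who saw which fields, and when."""
    visible = ROLE_VISIBLE_FIELDS.get(role, set())
    AUDIT_LOG.append(json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "role": role,
        "fields_unmasked": sorted(set(record) & visible),
    }))
    return mask_record(record, role)


record = {
    "hcp_name": "Example Clinic",
    "visit_summary": "Discussed formulary status.",
    "patient_id": "P-0001",
}
print(agent_read(record, role="sales_rep", agent_id="agent-1"))
print(AUDIT_LOG[-1])
```

Each read is logged before the masked view is returned, so every access leaves a trace. A production system would also need tamper-resistant storage, output validation, and retention policies, all of which are beyond this sketch.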

An Industry at a Crossroads

The pharmaceutical industry’s significant financial commitments to AI platforms mark a definitive strategic direction. Companies across the sector, from Novartis to AstraZeneca, have made a clear bet that this technology holds the key to solving fundamental problems of data fragmentation and operational inefficiency that have persisted for decades. The long-term vision is to create a more streamlined, intelligent, and responsive ecosystem, ultimately enhancing the way the industry interacts with healthcare providers and serves patients. However, the journey so far has revealed a landscape fraught with technical hurdles and regulatory complexities. The fundamental conflict between AI’s probabilistic nature and the life sciences’ deterministic needs has created a formidable barrier, particularly for the most impactful applications in compliance and safety. The path forward is proving to be a long-term endeavor, defined by the difficult but necessary work of building intricate layers of governance and customization to ensure that these powerful new tools play by the industry’s unyielding rules, every single time.