A profound transformation is under way in biomedical research, driven by the convergence of artificial intelligence and large-scale genomics. This is not merely an incremental improvement on existing methods; it represents a paradigm shift from traditional hypothesis-driven inquiry toward data-driven discovery. At the forefront, institutions such as the Eric and Wendy Schmidt Center at the Broad Institute of MIT and Harvard are pioneering strategies to bridge the gap between complex computational models and tangible clinical applications. The ambition is to accelerate the development of targeted, personalized therapies and to deepen our understanding of human disease, turning massive datasets into life-saving insights.

A New Era of Data-Driven Discovery

Shifting the Scientific Paradigm

For centuries, the scientific method in biology has followed a well-trodden path: a researcher formulates a specific hypothesis, designs experiments to test it, and then analyzes the results. This reductionist approach has yielded remarkable discoveries but is often limited by the scope of the initial question. Today, that process is increasingly being inverted. The current revolution is defined by the ability to first generate unprecedented volumes of high-resolution biological data, observing entire biological systems in their full complexity, and only then to generate hypotheses from the patterns that emerge. This data-first methodology lets scientists ask questions they didn’t know to ask, uncovering novel drug targets and intricate disease mechanisms that narrower, conventional methods would likely miss. The reversal is especially valuable for multifaceted diseases such as cancer or neurodegenerative disorders, where countless interacting variables contribute to pathology. By letting the data lead, researchers can build a more holistic and accurate map of disease, moving beyond single-gene or single-pathway explanations to embrace the true complexity of human biology.

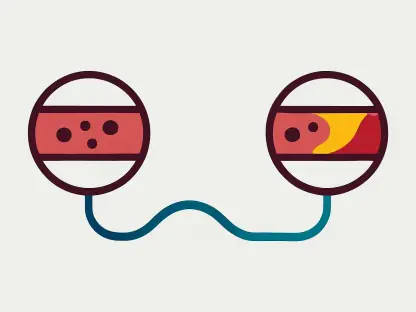

The technologies fueling this paradigm shift capture biological processes at a scale and resolution that were unimaginable just a few years ago. One of the most powerful is single-cell genomics, which allows researchers to dissect biological heterogeneity with remarkable precision. Traditional “bulk” sequencing methods provide a single, averaged molecular signal from an entire tissue sample, obscuring the critical differences between the individual cells that compose it. This is akin to trying to understand a city’s economy by looking only at its total GDP, ignoring the diverse activities of its individual businesses and residents. In contrast, single-cell analysis provides a molecular profile for each cell, revealing a rich tapestry of distinct cellular states, behaviors, and vulnerabilities. In oncology, for example, this is invaluable for understanding the complex ecosystem within a single tumor, where different cancer cells may have unique drug resistance mechanisms. By identifying and characterizing these subpopulations, researchers can design combination therapies that target the entire tumor, not just its most common cell type, with the aim of reducing the risk of relapse and improving patient outcomes.
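To make the averaging problem concrete, here is a minimal Python sketch using invented numbers for a single hypothetical drug-resistance gene measured in ten cells. It compares the one value a bulk measurement would report with a simple per-cell split; the fixed 1.0 threshold is an illustrative assumption, since real single-cell pipelines identify subpopulations by clustering many genes at once.

```python
from statistics import mean

# Hypothetical toy tumor: expression of one drug-resistance gene (arbitrary units)
# in 10 individual cells. Eight sensitive cells barely express it; two resistant
# cells express it strongly. These numbers are invented for illustration.
cells = [0.1, 0.2, 0.0, 0.1, 0.3, 0.2, 0.1, 0.0, 9.5, 10.5]

def bulk_signal(expr):
    """Bulk sequencing reports one averaged value for the whole sample."""
    return mean(expr)

def single_cell_subpopulations(expr, threshold):
    """Single-cell data lets us split cells by their own expression level.
    The fixed threshold is an illustrative assumption, not a standard cutoff."""
    high = [x for x in expr if x >= threshold]
    low = [x for x in expr if x < threshold]
    return {"high": high, "low": low}

bulk = bulk_signal(cells)
groups = single_cell_subpopulations(cells, threshold=1.0)
print(f"bulk average: {bulk:.2f}")
print(f"resistant-like cells: {len(groups['high'])} of {len(cells)}")
```

The bulk average (2.1) matches none of the cells: the two high-expressing cells are diluted by the eight low ones, which is exactly the kind of minority population a single-cell profile keeps visible.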

The Technologies Behind the Transformation

Complementing the depth of single-cell analysis is the crucial contextual information provided by spatial transcriptomics. This technology adds a geographical layer to the data, mapping where different cell types sit within a tissue. It answers not just which cells are present, but where they are and, critically, how they interact with their neighbors. These spatial relationships are often fundamental to disease progression and therapeutic response. For example, the success or failure of immunotherapy can depend heavily on the proximity and arrangement of immune cells relative to cancer cells within the tumor microenvironment: a tumor heavily infiltrated by T cells is more likely to respond to treatment than one in which immune cells are kept at the periphery. By integrating this spatial data, scientists can build a far more complete and dynamic picture of the biological battlefield. This enables more sophisticated biomarkers and therapies designed to modulate not just cell types but the architecture of the diseased tissue itself, opening entirely new avenues for intervention.
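A spatial measurement can be summarized in many ways. As one hedged illustration, the Python sketch below computes a simple infiltration score: the fraction of T cells lying within a chosen radius of any tumor cell. The cell coordinates, the 15 µm radius, and the score itself are assumptions made for the example, not a standard biomarker definition.

```python
import math

# Hypothetical spatial transcriptomics output: each cell has a type label
# (assumed already assigned from its expression profile) and x/y coordinates
# in micrometers. All positions are invented for illustration.
cells = [
    ("tumor", 0.0, 0.0), ("tumor", 10.0, 0.0), ("tumor", 0.0, 10.0),
    ("t_cell", 5.0, 5.0),    # inside the tumor nest
    ("t_cell", 12.0, 3.0),   # at the tumor edge
    ("t_cell", 80.0, 80.0),  # far away in the periphery
]

def infiltration_fraction(cells, radius):
    """Fraction of T cells lying within `radius` of at least one tumor cell
    (an illustrative score, not an established clinical metric)."""
    tumor = [(x, y) for kind, x, y in cells if kind == "tumor"]
    t_cells = [(x, y) for kind, x, y in cells if kind == "t_cell"]
    if not t_cells:
        return 0.0
    near = sum(
        1 for tx, ty in t_cells
        if any(math.dist((tx, ty), (ux, uy)) <= radius for ux, uy in tumor)
    )
    return near / len(t_cells)

print(infiltration_fraction(cells, radius=15.0))
```

Two of the three T cells sit near tumor cells, giving a score of about 0.67; shrinking the radius to 5 µm drops it to about 0.33, a reminder that such scores depend heavily on the distance cutoff chosen.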

The convergence of these genomic technologies generates datasets of staggering size and complexity, often encompassing expression levels for tens of thousands of genes across hundreds of thousands, or even millions, of individual cells. Interpreting this data is a monumental task that strains the capacity of traditional statistical methods. This is where machine learning becomes indispensable: its algorithms are well suited to finding subtle, non-linear patterns in high-dimensional data that would be invisible to the human eye. However, the most effective strategies combine machine learning’s pattern-recognition power with deep biological and mechanistic knowledge. It is not enough to simply feed data into an algorithm; the models must be guided by biological principles so that the patterns they identify represent genuine biological phenomena rather than statistical artifacts. This integration of computation and biology is the cornerstone of the new research paradigm, turning a deluge of raw data into actionable biological insights that can drive the next wave of medical breakthroughs.
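The pairing of statistical pattern-finding with biological knowledge can be illustrated with a deliberately tiny Python sketch: plain k-means clustering groups cells by expression, and a known-marker lookup then gives each cluster a biological interpretation. The three genes (CD3E, a T-cell marker; EPCAM, an epithelial marker; MKI67, a proliferation marker) and all expression values are illustrative assumptions; real pipelines work with thousands of genes, normalize the data, and use more robust clustering and marker tests.

```python
import math

# Hypothetical cells x genes matrix (rows = cells, columns = 3 genes).
# Real datasets have tens of thousands of genes; three keep the example readable.
GENES = ["CD3E", "EPCAM", "MKI67"]
cells = [
    [5.0, 0.1, 0.2], [4.8, 0.0, 0.1], [5.2, 0.2, 0.0],   # T-cell-like (invented)
    [0.1, 6.0, 3.9], [0.0, 5.7, 4.2], [0.2, 6.1, 4.0],   # proliferating epithelial-like
]

def kmeans(points, k, iters=20):
    """Plain Lloyd's k-means; seeds centroids with k evenly spaced input points."""
    step = len(points) // k
    centroids = [points[i * step][:] for i in range(k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assign each cell to its nearest centroid (Euclidean distance).
        labels = [min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points]
        # Move each centroid to the mean of its assigned cells.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids

labels, centroids = kmeans(cells, k=2)
# Biological-knowledge step: name each cluster by its highest-expressed marker gene.
for c, centroid in enumerate(centroids):
    top = GENES[max(range(len(GENES)), key=lambda g: centroid[g])]
    print(f"cluster {c}: top marker {top}")
```

Here the clustering separates the first three cells from the last three, and the marker step labels them as T-cell-like (CD3E) and epithelial-like (EPCAM). The clustering alone only says the groups differ; the interpretation comes from biological knowledge about the markers.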

From Raw Data to Actionable Insights

The Power of Causal Models

The enormous volume of genomic data being generated creates a significant bottleneck between raw data collection and actionable biological insight. To overcome this, researchers are focused on developing novel computational and mathematical frameworks that move beyond simple correlation. The most significant of these is the pursuit of “causal models.” Unlike conventional machine learning or statistical approaches that excel at identifying associations—for instance, that the expression of Gene A is correlated with a particular disease state—causal models aim to uncover the underlying cause-and-effect relationships that govern cellular behavior. They seek to answer the “why” and “how”: does a change in Gene A cause the disease, or is it merely a downstream effect of another process? By understanding how genetic variations, environmental stimuli, or drug compounds directly influence cellular pathways and responses, researchers can make far more accurate predictions about a drug’s potential efficacy and toxicity. This approach holds the promise of significantly reducing the high failure rates that plague clinical trials, as it allows for the pre-emptive identification of therapeutic strategies most likely to succeed based on a deep, mechanistic understanding of the disease, rather than just statistical association.
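The gap between association and causation can be shown with a small simulation. The Python sketch below encodes a hypothetical structural causal model in which an upstream driver Z raises both Gene A expression and disease risk, while Gene A itself has no effect. All probabilities are invented. Observational data show a strong link between Gene A and disease, but simulating an intervention that sets Gene A directly, written do(A), reveals that changing A alone does nothing.

```python
import random

def simulate(n, do_gene_a=None, seed=0):
    """Toy structural causal model (hypothetical): an upstream driver Z raises
    both Gene A expression and disease risk; Gene A itself has no effect.
    If do_gene_a is given, Gene A is set by intervention instead of by Z."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = rng.random() < 0.5                                   # upstream driver present?
        a = do_gene_a if do_gene_a is not None else (rng.random() < (0.9 if z else 0.1))
        disease = rng.random() < (0.8 if z else 0.1)             # depends on Z only
        rows.append((a, disease))
    return rows

def disease_rate(rows, a_value):
    """Fraction of diseased samples among those with the given Gene A state."""
    group = [d for a, d in rows if a == a_value]
    return sum(group) / len(group)

obs = simulate(20000)
print("observed  P(disease | A high):", round(disease_rate(obs, True), 2))
print("observed  P(disease | A low): ", round(disease_rate(obs, False), 2))
high = simulate(20000, do_gene_a=True, seed=1)
low = simulate(20000, do_gene_a=False, seed=1)
print("intervene P(disease | do(A high)):", round(disease_rate(high, True), 2))
print("intervene P(disease | do(A low)): ", round(disease_rate(low, False), 2))
```

In the observational data, disease is far more common when Gene A is high (roughly 0.73 versus 0.17 under these settings), yet the two interventional runs give identical disease rates (about 0.45), because Gene A is only a marker of Z, not a cause. A drug that silenced Gene A would look promising on correlation alone and fail in practice.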

The development of these causal models represents a crucial step toward engineering biology. If scientists can accurately model the causal chain of events that leads from a genetic mutation to a disease phenotype, they can then computationally simulate the effect of a potential drug before it ever enters a lab. This “in silico” experimentation allows for the rapid screening of thousands of potential therapeutic interventions, identifying the most promising candidates for further development while filtering out those likely to fail. This not only saves immense time and resources but also shifts drug discovery from a process of trial and error to one of rational design. By building predictive, mechanistic models of disease, the field is moving closer to a future where therapies can be designed from the ground up to target the specific causal drivers of a patient’s illness, marking a profound change in how new medicines are conceived and developed.
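In silico screening can be sketched with a toy causal model of a signaling pathway. In the Python example below, every node, weight, and drug is hypothetical: a mutation drives proliferation through a main kinase route and a weaker bypass route, each candidate compound is assumed to inhibit one node by a fixed fraction, and the simulation propagates that inhibition downstream to rank the candidates by predicted effect. Real mechanistic models are far richer, but the basic idea, simulating an intervention before running the experiment, is the same.

```python
# Hypothetical linear causal model of a signaling pathway (all weights invented):
# each node's activity = sum(weight * parent activity), then scaled down by any
# inhibition applied to that node.
EDGES = {  # child: [(parent, weight), ...]; listed in topological order
    "KINASE_X": [("MUTATION", 1.0)],
    "TF_Y": [("KINASE_X", 0.8)],
    "BYPASS_Z": [("MUTATION", 0.3)],
    "PROLIFERATION": [("TF_Y", 1.0), ("BYPASS_Z", 1.0)],
}

def simulate(inhibit=None):
    """Propagate activities through the DAG; `inhibit` = {node: fraction removed}."""
    inhibit = inhibit or {}
    activity = {"MUTATION": 1.0}
    for node, parents in EDGES.items():
        value = sum(w * activity[p] for p, w in parents)
        activity[node] = value * (1.0 - inhibit.get(node, 0.0))
    return activity["PROLIFERATION"]

# Hypothetical candidate compounds and the node each is assumed to inhibit.
candidates = {
    "drug_a": {"KINASE_X": 0.9},
    "drug_b": {"BYPASS_Z": 0.9},
    "drug_c": {"TF_Y": 0.5},
}
baseline = simulate()
ranking = sorted(candidates, key=lambda d: simulate(candidates[d]))
for drug in ranking:
    print(drug, round(simulate(candidates[drug]) / baseline, 2))
```

Under these assumed weights, drug_a ranks first (proliferation falls to about 35% of baseline) because it blocks the dominant route, while drug_b barely helps because it hits only the minor bypass. A purely correlational model would struggle to make this distinction.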

Building Trust with Interpretable AI

For any AI-driven insight to be successfully translated into clinical practice, it cannot operate as a “black box.” A model that makes a life-or-death recommendation without providing a clear rationale will never be fully trusted by clinicians, regulators like the FDA, or the patients themselves. Consequently, a key priority for leading research centers is the development of interpretable machine learning models. These are algorithms designed not only to make accurate predictions but also to provide transparent, understandable explanations for their reasoning. For instance, if a model predicts that a specific cancer patient will respond well to a particular immunotherapy, an interpretable version would also highlight the specific biological features—such as the presence of certain immune cell types in a particular spatial arrangement within the tumor—that led to that conclusion. This transparency is non-negotiable for real-world clinical implementation.
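One simple route to interpretability is an additive model whose prediction decomposes exactly into per-feature contributions. The Python sketch below uses a logistic model with hand-set, hypothetical weights and invented feature names echoing the immunotherapy example; in practice the weights would be learned from data, and more complex models typically need post-hoc explanation methods instead.

```python
import math

# Hypothetical, hand-set weights for an additive (logistic) response model.
# In practice these would be learned from data; the feature names are illustrative.
WEIGHTS = {
    "cd8_t_cells_in_tumor_core": 1.2,
    "pd_l1_expression": 0.8,
    "immune_cells_only_at_margin": -1.5,
}
BIAS = -0.5

def predict_with_explanation(patient):
    """Return P(response) and each feature's additive contribution to the log-odds,
    sorted from most to least influential."""
    contributions = {name: WEIGHTS[name] * patient[name] for name in WEIGHTS}
    log_odds = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, sorted(contributions.items(), key=lambda kv: -abs(kv[1]))

patient = {  # standardized feature values for one hypothetical patient
    "cd8_t_cells_in_tumor_core": 1.5,
    "pd_l1_expression": 0.5,
    "immune_cells_only_at_margin": 0.0,
}
prob, reasons = predict_with_explanation(patient)
print(f"predicted response probability: {prob:.2f}")
for name, value in reasons:
    print(f"  {name}: {value:+.2f}")
```

For this hypothetical patient, the output reports a response probability of about 0.85 and shows that CD8 T-cell density in the tumor core contributes most to that prediction, the kind of rationale a clinician can inspect and question.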

The value of interpretability extends far beyond building trust; it is also a powerful engine for new scientific discovery. When a model reveals the specific biological features driving its predictions, it can point researchers toward previously unknown disease mechanisms or biomarkers. This creates a virtuous cycle where the AI model not only assists in clinical decision-making but also generates new, testable hypotheses that can be taken back to the lab, deepening our fundamental understanding of biology. Interpretable models serve this dual purpose: they provide the necessary transparency for clinical translation while simultaneously functioning as discovery tools that illuminate the complex inner workings of human disease. This ensures that the integration of AI into medicine is not just about automation, but about augmenting human intelligence and accelerating the pace of biomedical innovation.

Revolutionizing Patient Care and Research

The Ultimate Goal: Truly Personalized Medicine

One of the most promising applications of this integrated approach is the realization of truly personalized medicine. The overarching vision is to create unified computational frameworks capable of integrating a vast array of patient-specific data, including genomic sequences, gene expression profiles from single-cell analysis, protein levels, clinical history, lifestyle factors, and treatment outcomes. By analyzing this multi-modal data in its entirety, these sophisticated models can generate a holistic, dynamic profile of an individual’s health and disease. The goal is to move beyond treating diseases based on broad population averages and instead predict which therapies are most likely to be effective for a specific person, at a specific point in time. This tailored approach could identify the optimal chemotherapy regimen for a cancer patient based on their tumor’s unique genetic makeup or select the most effective antidepressant by analyzing an individual’s neuro-genomic profile, finally moving away from the often inefficient one-size-fits-all model of treatment.
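A unified framework has to put very different measurements on a common footing before they can be combined. As a minimal sketch of one simple strategy, early fusion, the Python example below concatenates each hypothetical patient's genomic, proteomic, and clinical features and z-scores every feature across the cohort so that no modality dominates merely because of its units. The cohort, modalities, and values are invented; real multi-modal models often learn modality-specific representations instead.

```python
from statistics import mean, pstdev

# Hypothetical cohort: each patient has features from different modalities,
# measured on very different scales (counts, concentrations, years).
cohort = {
    "p1": {"genomic": [12.0, 0.0], "proteomic": [350.0], "clinical": [54.0]},
    "p2": {"genomic": [3.0, 1.0], "proteomic": [120.0], "clinical": [67.0]},
    "p3": {"genomic": [8.0, 1.0], "proteomic": [410.0], "clinical": [41.0]},
}
MODALITIES = ["genomic", "proteomic", "clinical"]

def fused_profiles(cohort):
    """Early fusion: concatenate modalities, then z-score each feature across
    patients so no modality dominates just because of its units."""
    raw = {pid: [v for m in MODALITIES for v in rec[m]] for pid, rec in cohort.items()}
    columns = list(zip(*raw.values()))
    # Guard against zero variance by falling back to a scale of 1.0.
    stats = [(mean(col), pstdev(col) or 1.0) for col in columns]
    return {pid: [(v - mu) / sd for v, (mu, sd) in zip(vec, stats)] for pid, vec in raw.items()}

for pid, vec in fused_profiles(cohort).items():
    print(pid, [round(v, 2) for v in vec])
```

After fusion, each feature has mean zero across the cohort, so a downstream model sees a protein level in the hundreds and an age in the tens on comparable scales.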

Achieving this ambitious vision requires extensive, cross-disciplinary collaboration. It is not a task that can be solved by computational scientists alone. Success depends on a deep partnership between algorithm developers, experimental biologists who can validate the models’ predictions in the lab, clinicians who provide invaluable real-world context and patient data, and the patients themselves, who are essential partners in the research process. Building these integrated solutions demands the creation of new institutional structures and educational programs that break down traditional academic silos and foster a shared language between these diverse fields. The solutions developed must address genuine clinical needs and be seamlessly integrated into existing healthcare workflows to ensure they provide tangible benefits. This collaborative ecosystem is the foundation upon which the future of personalized medicine will be built, ensuring that technological advances translate into improved health outcomes for all.

Fixing Foundational Challenges

Beyond developing new therapies, this new computational paradigm can help solve long-standing systemic issues that have hindered progress in the biomedical field. One of the most significant is the “reproducibility crisis,” where findings from one lab often cannot be replicated by another. By promoting the use of standardized analytical pipelines and championing an open-science model that mandates the sharing of both data and the code used to analyze it, rigorous computational methods can dramatically enhance the transparency and reproducibility of scientific findings. When other researchers can access and re-run the original analysis, it becomes far easier to validate results, build upon previous work, and identify potential errors. This commitment to openness and rigor helps build a more reliable and cumulative body of scientific knowledge, accelerating progress across the entire field.
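Reproducibility in practice means recording exactly what was run. The hedged Python sketch below pairs a seeded, hypothetical bootstrap analysis with a provenance record containing a SHA-256 hash of the input data, the full parameter set, and the result. The analysis and record format are illustrative assumptions; a real pipeline would also pin the code version and software environment.

```python
import hashlib
import json
import random

def run_analysis(data, params):
    """Hypothetical analysis step: a seeded bootstrap estimate of the mean."""
    rng = random.Random(params["seed"])
    estimates = []
    for _ in range(params["n_bootstrap"]):
        sample = [rng.choice(data) for _ in data]
        estimates.append(sum(sample) / len(sample))
    return sum(estimates) / len(estimates)

def provenance(data, params, result):
    """Record exactly what was run (illustrative format), so others can check it."""
    return {
        "data_sha256": hashlib.sha256(json.dumps(data).encode()).hexdigest(),
        "params": params,
        "result": result,
    }

data = [2.1, 3.4, 1.9, 4.2, 3.3]  # invented measurements
params = {"seed": 42, "n_bootstrap": 200}
record = provenance(data, params, run_analysis(data, params))
print(json.dumps(record, indent=2))

# An independent re-run with the same data and parameters yields the same result.
assert run_analysis(data, params) == record["result"]
```

Because the random seed is part of the recorded parameters, anyone holding the same data and code can rerun the analysis and check that they get an identical result, and a mismatched data hash immediately flags that they are not analyzing the same input.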

At the same time, the use of sensitive human genomic data raises significant ethical and privacy concerns. Protecting patient data is paramount, but it must be balanced against the need to analyze large datasets to make scientific discoveries. To address this challenge, researchers are developing and deploying privacy-preserving machine learning techniques. One prominent example is federated learning, which allows a model to be trained on datasets distributed across different hospitals or research institutions without the raw, sensitive data ever being centralized in one place. Instead, the model “travels” to the data: each site trains it locally and sends back only model updates (such as adjusted parameters), which a central server aggregates into an improved shared model. Because these updates can still leak some information, federated learning is often combined with safeguards such as secure aggregation or differential privacy. Together, these methods substantially reduce the exposure of individual patient data while still enabling the large-scale, collaborative research needed to tackle the world’s most complex diseases, demonstrating that scientific advancement and data security can go hand in hand.
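The mechanics of federated learning can be sketched with one common aggregation scheme, federated averaging (FedAvg). In the Python example below, three hypothetical hospitals each fit a one-feature linear model to their own invented data; only the model parameters leave each site, and the server averages them weighted by each site's sample size. This is a teaching sketch under those assumptions, without the secure aggregation or differential privacy a real deployment would add.

```python
# Minimal federated averaging (FedAvg-style) sketch for a one-feature linear model
# y = w * x + b. Each hypothetical "hospital" trains locally; only (w, b) leave the
# site. No secure aggregation or differential privacy is included here.

def local_train(w, b, data, lr=0.05, epochs=50):
    """Full-batch gradient descent on mean squared error, run at one site."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def federated_round(global_w, global_b, sites):
    """Send the global model to every site, then average returned weights by site size."""
    updates = [(local_train(global_w, global_b, data), len(data)) for data in sites]
    total = sum(n for _, n in updates)
    new_w = sum(w * n for (w, _), n in updates) / total
    new_b = sum(b * n for (_, b), n in updates) / total
    return new_w, new_b

# Three hypothetical hospitals whose private (invented) data follow y = 2x + 1.
sites = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (0.5, 2.0), (1.5, 4.0)],
    [(3.0, 7.0)],
]
w, b = 0.0, 0.0
for _ in range(30):
    w, b = federated_round(w, b, sites)
print(f"learned w={w:.2f}, b={b:.2f}")
```

After 30 rounds the shared model recovers the relationship (w close to 2, b close to 1) even though no site ever revealed its data points, and the third site, with only one patient, could not have learned it alone.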

Laying the Groundwork for a New Generation

Infrastructure for a Data-Intensive World

This data-intensive model of research demands a robust supporting infrastructure capable of handling information at petabyte scale. That means significant investment in high-performance computing clusters, cloud platforms, and specialized storage solutions designed to manage and process massive datasets. Beyond the physical hardware, a major focus is on developing highly efficient algorithms, optimized to maximize analytical power while minimizing computational cost, a critical step toward democratizing access to these advanced methods for labs and institutions with fewer resources. The success of this new field also depends on training a new generation of scientists fluent in both computational and biological disciplines, within educational programs and institutional structures that foster interdisciplinary collaboration, the bedrock of modern biomedical discovery. This integrated environment for research and training is essential for turning computational potential into clinical reality.
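One concrete example of the efficiency gains involved is sparse storage. Single-cell count matrices are mostly zeros, so storing only the nonzero entries saves memory and computation. The Python sketch below builds the compressed sparse row (CSR) layout, the same data/indices/indptr arrays used by, for example, SciPy's `csr_matrix`, from a small invented matrix; it is an illustration, not a production implementation.

```python
# Single-cell count matrices are mostly zeros (most genes go undetected in any
# given cell), so storing only the nonzero entries cuts memory dramatically.
# Minimal compressed sparse row (CSR) sketch; the toy matrix is invented.

def to_csr(dense):
    """Convert a list-of-rows matrix into CSR arrays (data, indices, indptr)."""
    data, indices, indptr = [], [], [0]
    for row in dense:
        for col, value in enumerate(row):
            if value != 0:
                data.append(value)     # the nonzero value itself
                indices.append(col)    # its column (gene) index
        indptr.append(len(data))       # where the next row starts in `data`
    return data, indices, indptr

def row_sums(csr):
    """Total counts per cell, touching only the stored nonzeros."""
    data, _, indptr = csr
    return [sum(data[indptr[i]:indptr[i + 1]]) for i in range(len(indptr) - 1)]

counts = [  # 3 hypothetical cells x 8 genes
    [0, 0, 3, 0, 0, 0, 1, 0],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [5, 0, 0, 0, 2, 0, 0, 0],
]
csr = to_csr(counts)
print("stored values:", len(csr[0]), "of", sum(len(r) for r in counts))
print("per-cell totals:", row_sums(csr))
```

Here only 4 of 24 entries are stored, and per-cell totals are computed without visiting a single zero; at the scale of millions of cells, savings of this kind can decide whether an analysis fits in memory at all.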

From the Lab to the Clinic

The acceleration of both data generation and analytical power marks a turning point. Emerging technologies such as long-read sequencing and multi-omics profiling provide an even more detailed view of cellular function, while advances in AI, including foundation models trained on vast biological datasets, are unlocking new levels of predictive accuracy. The ultimate ambition of this scientific revolution is to translate these advances into direct clinical impact: the rapid development of highly targeted therapies, accurate prediction of patient responses to treatment, and the detection of disease long before symptoms appear. The integration of machine learning with high-resolution genomic data is not merely an incremental technical advance; it is a transformative shift that is reshaping how scientists understand and combat human disease, paving the way for a healthier future.