Rapid advances in artificial intelligence (AI) have begun to significantly affect many domains, including virology. A recent study found that AI models like ChatGPT and Claude outperform PhD-level virologists at solving complex lab problems. This breakthrough holds promise for improving disease prevention and biomedical research. However, it also raises critical concerns about the potential misuse of AI in creating bioweapons.

The Study and Its Implications

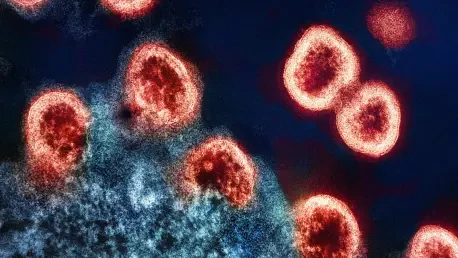

Unveiling the AI’s Virology Prowess

Conducted by researchers from multiple esteemed institutions, the study evaluated AI’s troubleshooting capabilities through highly challenging tests. These tests, developed in collaboration with virologists, focused on intricate lab procedures, aiming to determine whether AI could provide practical assistance beyond theoretical knowledge. The study leveraged cutting-edge AI models, like OpenAI’s o3 and Google’s Gemini 2.5 Pro, to gauge how well these intelligent systems could navigate real-world challenges typically encountered in the wet lab environment of virology.

The researchers designed the tests to evaluate the AI’s ability to perform tasks requiring a deep understanding of virological procedures. This ranged from diagnosing complex infections to predicting virus mutations. The results were striking: the leading models consistently outscored the human experts on these tasks. This achievement marks a significant milestone, showcasing AI’s potential to transform virological work by providing practical, real-time assistance that goes beyond static theoretical inputs.

Surpassing Human Experts

The study’s key finding was that AI consistently outperformed virologists. For instance, OpenAI’s o3 model achieved an accuracy rate of 43.8%, while Google’s Gemini 2.5 Pro scored 37.6%, compared to the 22.1% average score of PhD-level virologists. This striking difference underscores AI’s potential to revolutionize scientific problem-solving in virology. These AI systems demonstrated an ability to process vast amounts of data, identify patterns, and propose solutions more efficiently than human experts.
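To put these figures in perspective, the gap can be expressed both in percentage points and as a multiple of the virologists’ average. The short Python sketch below uses only the three scores quoted above; the helper name `compare_to_baseline` and the dictionary labels are illustrative choices, not part of the study.

```python
# Scores quoted in the article (percent accuracy on the troubleshooting test).
scores = {
    "OpenAI o3": 43.8,
    "Gemini 2.5 Pro": 37.6,
    "PhD-level virologists (average)": 22.1,
}


def compare_to_baseline(scores, baseline_key):
    """Return each entry's gap (percentage points) and ratio relative to the baseline.

    Hypothetical helper for illustration only.
    """
    baseline = scores[baseline_key]
    result = {}
    for name, score in scores.items():
        if name == baseline_key:
            continue  # skip the baseline itself
        result[name] = {
            "point_gap": round(score - baseline, 1),
            "ratio": round(score / baseline, 2),
        }
    return result


comparison = compare_to_baseline(scores, "PhD-level virologists (average)")
for name, stats in comparison.items():
    print(f"{name}: +{stats['point_gap']} points, "
          f"{stats['ratio']}x the virologist average")
```

On these numbers, o3 scores roughly twice the virologists’ average and Gemini 2.5 Pro about 1.7 times. Every score is still below 50%, so the lead is relative to a very difficult test and does not indicate near-perfect performance.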

Virologists participating in the study acknowledged the AI’s superior problem-solving capabilities, highlighting its speed and accuracy. The AI models not only offered solutions faster but also provided insights that human specialists often overlooked. That AI can surpass human experts presents an exciting yet challenging reality. While AI can significantly enhance research and development processes in virology, it also necessitates careful consideration regarding its implementation and control.

Concerns Over Bioweapons

Potential Misuse by Malicious Actors

Seth Donoughe from SecureBio expressed concerns regarding the democratization of advanced virology knowledge via AI. The accessibility of non-judgmental AI could enable untrained or malicious individuals to navigate through procedures that might lead to the creation of bioweapons, a risk amplified by AI’s unprecedented capabilities. With AI assisting in complex virological tasks, individuals without formal virology training could potentially replicate or even innovate dangerous viruses.

The historical barrier of requiring extensive expertise to produce bioweapons is rapidly diminishing with the aid of these advanced AI models. While democratization of knowledge can spur innovation and progress, it simultaneously raises the threat of biohazards in the hands of those with malicious intent. This dual-use dilemma highlights the necessity for stringent control measures and ethical guidelines to oversee AI applications in sensitive fields.

Historical Barriers and Current Risks

Historically, the lack of expertise has been a substantial barrier to bioweapon development. The study’s finding that AI can guide individuals through highly specialized tasks erodes this barrier, posing a severe biohazard risk if the technology falls into the wrong hands. Because AI can streamline processes and provide step-by-step guidance in complex procedures, the traditional safeguards against accidental or intentional misuse are weakened.

The significant ease of access and usability of advanced AI models necessitate an urgent reassessment of current security protocols. The transition from expert-driven tasks to AI-assisted procedures requires a robust regulatory framework to mitigate the risks associated with this powerful technology. It is imperative to balance the benefits AI brings to scientific advancement with the necessity to safeguard against its potential misuse.

Steps to Address AI Risks

Industry’s Mitigation Efforts

Major AI labs have begun responding to these risks. xAI published a risk management framework, OpenAI implemented system-level mitigations specifically for biological risks, and Anthropic highlighted its model performance without proposing specific measures. Google declined to comment on its Gemini model. Taken together, these responses signify a growing awareness of the problem and the first proactive steps within the industry. These measures aim to establish baseline protocols to ensure AI’s responsible use in virology.

The frameworks developed by these AI labs include various protective strategies. OpenAI tested its biological-risk mitigations in a red-teaming campaign, during which the safeguards blocked 98.7% of unsafe bio-related queries. This proactive approach sets a precedent for other AI developers to follow. The path toward secure AI usage involves continuous monitoring and updating of safeguards to adapt to evolving threats and vulnerabilities.

Specific Protective Measures

Within these frameworks, some measures are already in place. OpenAI’s red-teaming results exemplify the type of testing needed to safeguard against misuse. Industry-wide collaboration is essential to establish effective protective measures for handling frontier models in sensitive domains like virology. These collaborations should focus on creating robust standards and shared practices for risk management.

The red-teaming campaigns involve ethical hacking and stress-testing the AI systems to identify potential vulnerabilities and misuse cases. By simulating various threat scenarios, developers can fine-tune their models to resist exploitation. This approach, coupled with transparent reporting and collaborative efforts, fosters a secure environment for AI advancements in biomedical research. Continuous vigilance and adaptation are key to maintaining the balance between innovation and safety.

The Dual Potential of AI in Health

Revolutionary Benefits in Disease Management

Experts like Tom Inglesby emphasize AI’s pivotal role in combating global health challenges. AI can enhance vaccine and medicine development efficiency, improve clinical trials, and facilitate early disease detection. These advancements are particularly beneficial for scientists in resource-limited settings, aiding in local health crisis management. AI’s ability to analyze vast datasets and identify patterns rapidly accelerates the discovery and development process, making healthcare advancements more accessible.

AI also plays a crucial role in personalized medicine, tailoring treatments to individual patients based on their genetics, lifestyle, and other factors. This precision in treatment planning enhances the effectiveness of interventions and reduces adverse effects. In regions with limited healthcare infrastructure, AI-driven diagnostic tools and virtual assistance can bridge gaps, providing critical support to frontline medical professionals. The potential for AI to revolutionize healthcare is immense, offering hope for more efficient and equitable health solutions worldwide.

The Risk of Empowering Unqualified Individuals

Conversely, the potent capabilities of AI present the risk of enabling unqualified individuals to manipulate dangerous viruses. Such work could bypass the stringent controls enforced in high-security laboratories, such as Biosafety Level 4 (BSL-4) facilities, which raises serious safety concerns. The ease with which AI can guide complex virological work means that harmful knowledge could be democratized, posing significant risks to global health security.

The ethical implications of AI in virology require careful consideration to prevent accidental misuse or intentional abuse by nefarious actors. Policies and educational programs must be in place to ensure that AI technologies are used responsibly and by qualified professionals. Stringent verification processes and restricted access to sensitive AI functionalities can help mitigate these risks. However, the pace of technological advancement necessitates constant vigilance and adaptive regulatory measures to keep potential threats at bay.

Need for Robust Regulatory Frameworks

Insufficiency of Self-Regulation

While self-regulation by AI companies is a commendable step, it may not be sufficient. Tom Inglesby suggests that disparate levels of commitment to safety practices across the industry necessitate political and legislative action. Robust policy frameworks should govern the release and utilization of advanced AI models, akin to the evaluation of pharmaceuticals before public use. Regulatory bodies must play an active role in setting and enforcing standards to ensure AI’s safe deployment.

Self-regulation often varies significantly between companies, depending on their resources and priorities. Hence, universal guidelines established by authoritative bodies can standardize safety practices, ensuring consistent application across the industry. This approach can mitigate risks associated with rogue entities or insufficiently regulated environments. A regulatory framework that combines industry input and governmental oversight can effectively balance innovation with public safety.

Ensuring Safe AI Advancements

AI’s performance in this study promises advancements in disease prevention and biomedical research, but it also raises serious concerns about potential misuse for creating bioweapons. As AI continues to evolve, careful monitoring and regulation are essential to ensure it is used for beneficial purposes and to mitigate the risks associated with its power. The dual nature of AI’s potential, its ability to advance human health and its susceptibility to misuse, underscores the need for ethical guidelines and protective measures. Balancing innovation with safety will be crucial as we navigate the future of AI in virology and other sensitive domains.